Computer Science 3200, Fall '24

Course Diary

Copyright 2024 by H.T. Wareham, R. Campbell, and D. Churchill

All rights reserved

Week 1,

Week 2,

Week 3,

Week 4,

(In-class Exam #1 Notes),

Week 5,

Week 6,

Week 7,

Week 8,

Week 9,

(In-class Exam #2 Notes),

Week 10,

Week 11,

Week 12,

(Final Exam Notes),

Week 13,

(end of diary)

In the notes below, RN3, RN4, and DC3200 will refer

to Russell and Norvig (2010), Russell and Norvig (2022), and David

Churchill's COMP 3200 course notes for the Fall 2023 semester.

Thursday, September 5 (Lecture #1)

[DC3200, Lecture #1;

RN3, Chapter 1; Class Notes]

- Went over course outline.

- As this course is on algorithmic techniques for artificial

intelligence, let us first give brief overviews of intelligence,

artificial intelligence, and algorithms.

- What is intelligence?

- Intelligence is at its heart the ability to perform

particular tasks, e.g.,

- problem solving, e.g., playing complex games like

chess and Go, proving mathematical theorems,

logical inference.

- being creative, e.g., producing literature, music, or

art, doing research.

- using tools to perform tasks

- social interaction with others

- remembering / learning

- talking

- seeing

- Intelligence is typically associated with agents and the

behavior these agents exhibit when interacting with an

external environment (more on this in the next lecture).

- Intelligence has often been seen as anything humans do that

animals don't, e.g., proposed biological Kingdom-level class

Psychozoa for humans (Huxley (1957)); as evidence has

accumulated

of lower-level intelligence in animals, e.g., gorilla

communication by American Sign Language (ASL), counting

by crows, memory in bees, complex social interaction and

tool use by chimpanzees, intelligence has been

redefined in terms of complex high-level tasks, e.g.,

(1-2) above, to preserve human superiority.

- This view of intelligence as exclusively human (and

high-level adult

human at that) surfaces in what we find anomalous (and

funny), e.g., "Children / animals can't do that!"

- Example: Not the Nine O'Clock News (1980): Gerald the Gorilla

(Link)

- What is artificial intelligence (AI)?

- Mythology and history are filled with artificial creatures

that exhibited the appearance of (if not actual)

intelligence, e.g., the bronze giant Talos, Roger Bacon's

talking bronze head, European clock and musical automata,

Vaucanson's mechanical duck (1739).

- The term "artificial intelligence" was coined by John

McCarthy to describe the goal of a two-month

summer workshop held at Dartmouth College in 1956. AI

is essentially the implementation of intelligent abilities

by machines (in particular, electronic computers).

- Many AI application areas, e.g.,

- Problem solvers, e.g., General Problem Solver (GPS),

artificial theorem provers, chess and Go programs

(Deep Blue (1997); AlphaGo (2016)).

- Logical / probabilistic reasoning systems, e.g.,

expert systems, Bayesian networks.

- Generative AI, e.g., ChatGPT, DALI

- (Swarm) Robotics

- Machine learning

- Natural Language Processing (NLP)

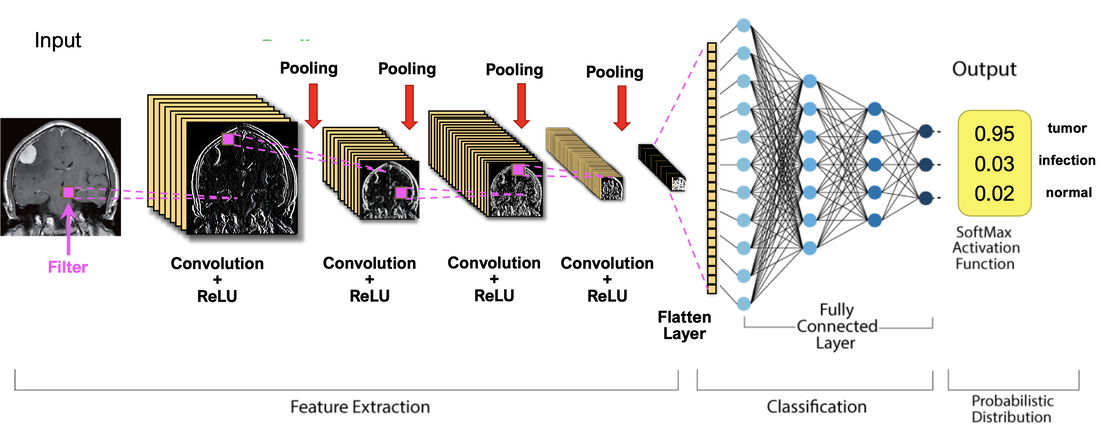

- Computer Vision

- Can distinguish types of AI based on mode of

implementation (weak / strong), implementation

mechanisms (symbolic / connectionist), and extent of

implemented ability (Artificial Specialized Intelligence

(ASI) / Artificial General Intelligence (AGI)).

- Strong AI uses computational approximation of human /

natural cognitive mechanisms; Weak AI uses any

possible computational mechanisms.

- Symbolic AI uses computer programs operating

within discrete-entity frameworks, e.g., logic;

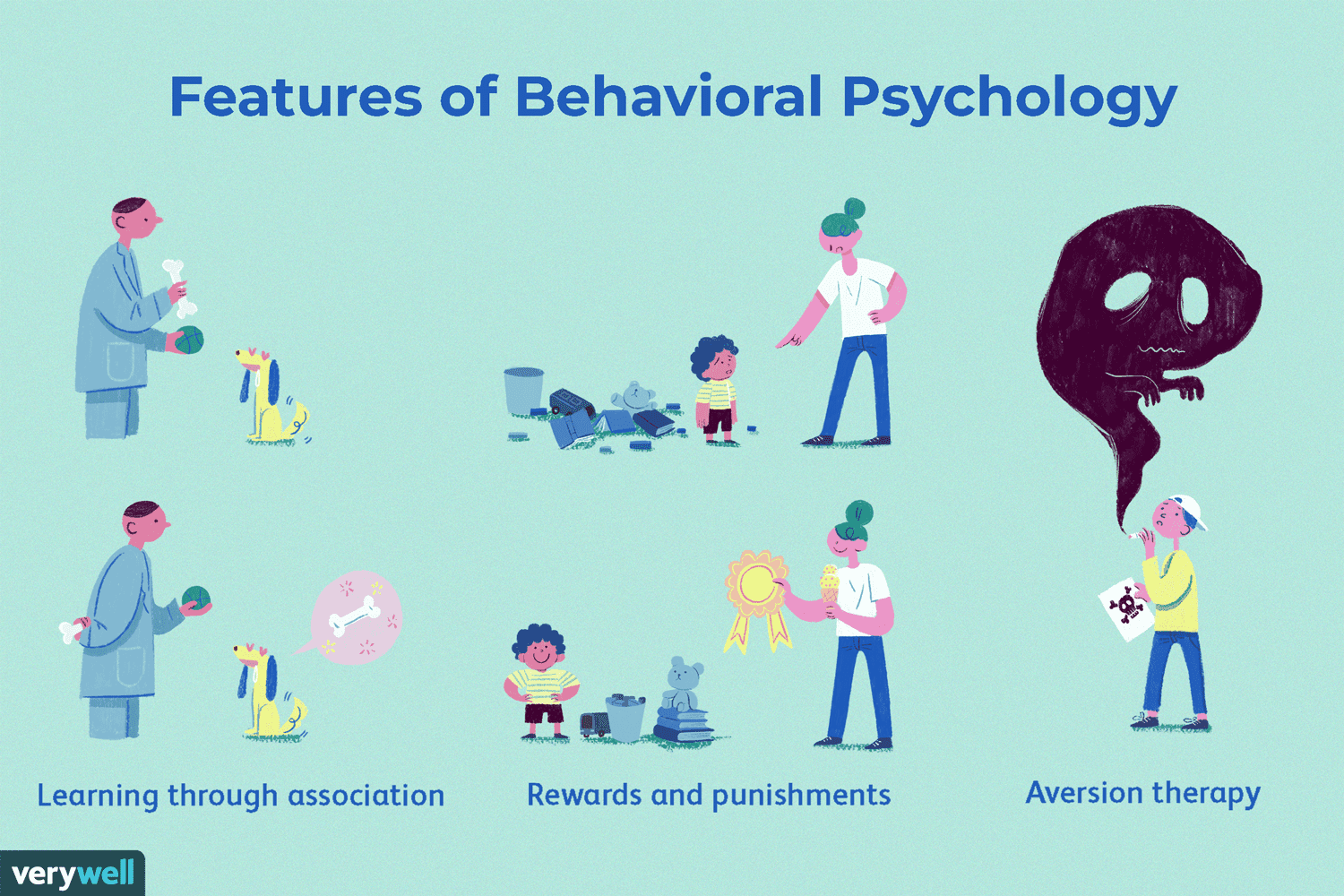

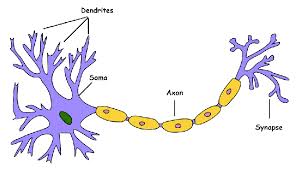

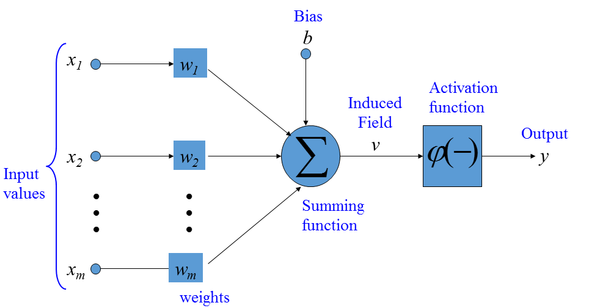

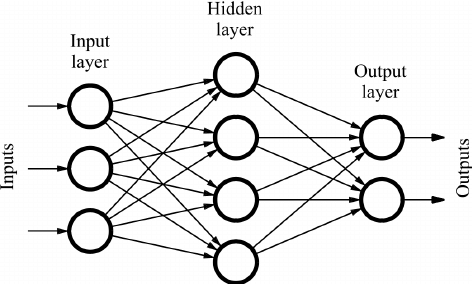

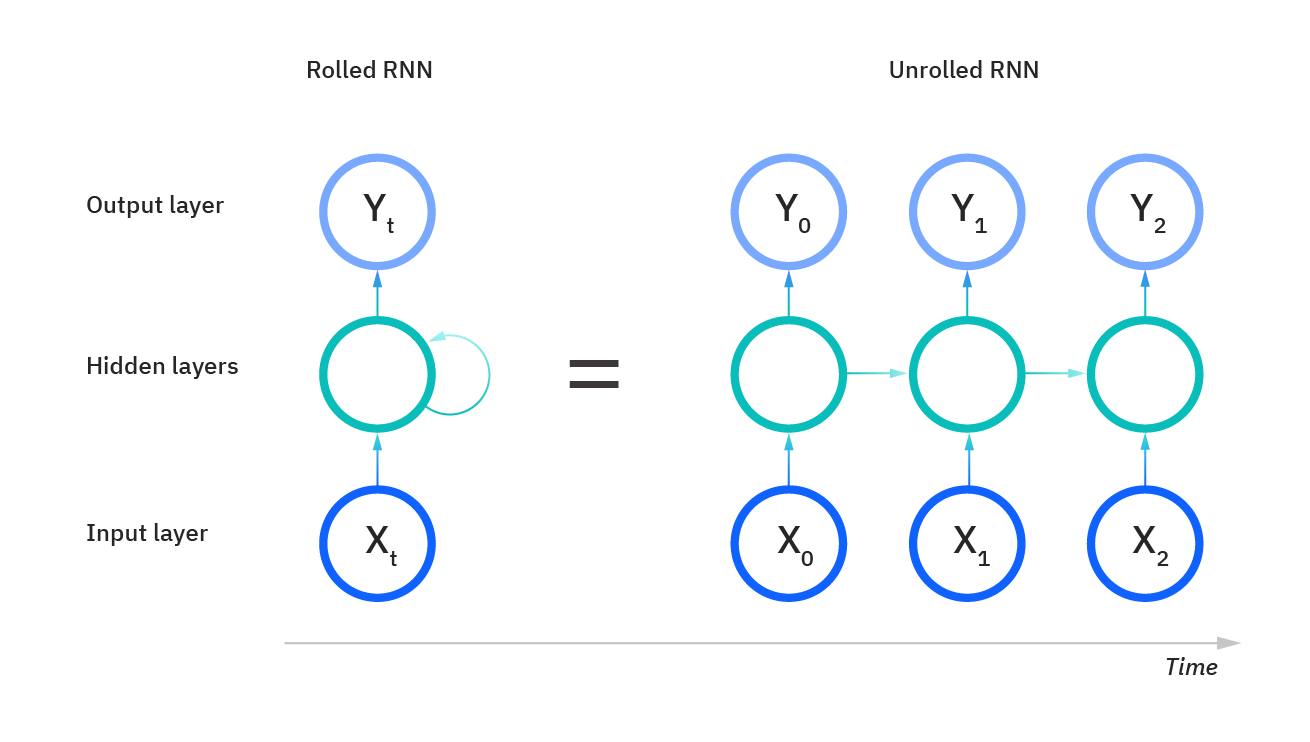

Connectionist AI uses approximations of biological

brain mechanisms, e.g., neural networks.

- ASI implements a single intelligent ability, e.g.,

recognizing faces, answering questions,

playing chess; AGI implements all intelligent abilities

performed by a human being.

- The initial focus of AI research was on strong symbolic ASI

for high-level intelligent abilities (tasks (1-2) above) with

the hope that developed ASI modules could be combined to

create AGI. However, given the difficulties of implementing

even supposedly low-level intelligent abilities (tasks

(3-7) above), let alone AGI, current research tends more to

weak connectionist ASI.

- Though it has gone through several "winters" in the last

65+ years (Haigh (2023a, 2023b, 2024a, 2024b, 2025)), AI is currently in

a boom period, with

weak connectionist systems exhibiting seemingly

human- (and sometimes trans-human-) level performance,

e.g., ChatGPT, medical image analysis.

- It is important to keep the distinctions made above in mind,

particularly between ASI and AGI; it is often in the

interests of researchers and corporations to claim

that their systems do far more they than they actually do,

and the resulting expectations can have embarrassing, e.g.,

automated machine (mis)translation, or even lethal, e.g.,

(non-)autonomous vehicles, consequences.

- What is an algorithm?

- Classical CS definition: Given a problem P which specifies

input-output pairings, an algorithm for P is a finite

sequence of instructions which, given an input I, specifies

how to compute that input's associated output in a finite

amount of time.

- This is the type of algorithm underlying symbolic GOFAI

(Good Old-Fashioned AI); such algorithms are both created

(hand-crafted) and understandable by humans.

- Many modern AI systems have broadened the term "algorithm" to

methods derived by machine learning systems that have been

trained on massive amounts of data; such algorithms are

machine-crafted and in many cases not easily understandable

by humans, e.g., BERTology = the field of research devoted

to trying to understand why the NLP BERT Large Language

Model produces the outputs that it does.

- It is critically important to keep the hand-crafted /

machine-crafted distinction in mind given emerging

challenges in real-world AI.

- In order for AI systems to operate in tandem with

humans or autonomously, these systems must be

trustworthy, i.e.,

- These systems are reliable, i.e., they produce

wanted behaviors consistently;

- These systems are fair, i.e., they do not encode

bias that compromises their behavior; and

- These systems are explainable, i.e., the reasons

why a system makes the decisions it does can be

recovered in an efficient manner.

While these properties can be ensured in hand-crafted,

algorithms, it is not yet known if this can be done

(even in principle, let alone efficiently (Buyl and De

Bie (2024); Adolfi et al (2024a, 2024b)) for machine-crafted

algorithms.

- AI is most certainly in an exciting yet unnerving state at present,

but if one can forego knee-jerk panic, there are

many opportunities for both applying existing and developing new

algorithmic techniques for AI.

- In this course, we will first look at symbolic hand-crafted

algorithmic techniques (search (single agent / multi-agent),

constraint satisfaction, logical reasoning, planning)

and then move on to symbolic and connectionist machine-crafted

techniques (evolutionary computation, reinforcement learning,

neural networks and deep learning).

- But before all that, next lecture, a closer look at agents and

environments -- see you then!

- Potential exam questions

Tuesday, September 10 (Lecture #2)

[DC3200, Lecture #2;

RN3, Chapter 2; Class Notes]

- Agents and Environments

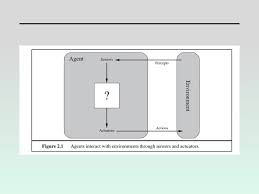

- Agents

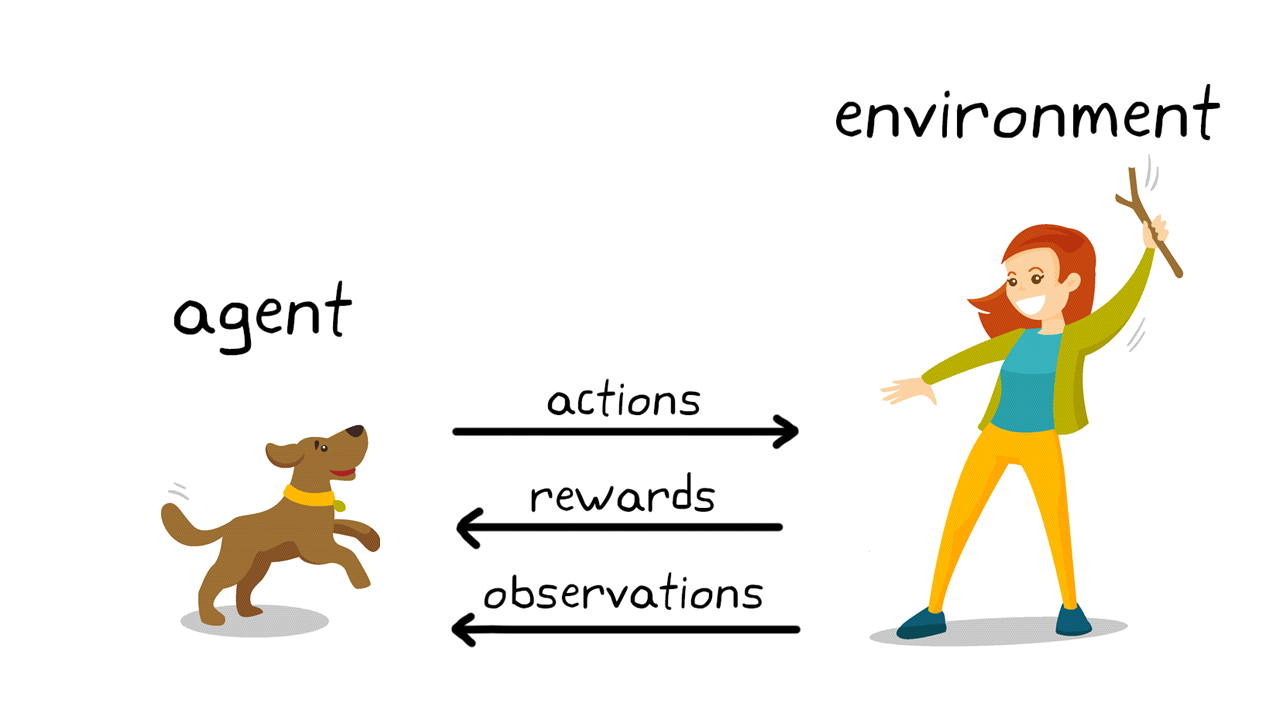

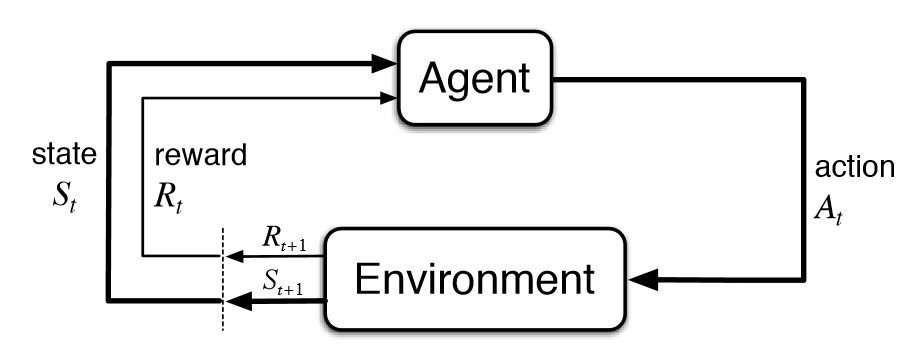

- An agent exists and performs intelligent behaviors,

e.g., makes decisions and takes actions, in a specific

environment and interacts with that environment via

a sense-understand-plan-act loop, i.e.,

- An agent perceives its environment through

sensors, e.g., camera, touch, taste, keyboard

input; these sensors provide percepts, which

form percept sequences over time.

- Agents act in their environment through

actuators, e.g., limbs, tools, allowable moves in

a game; actuators perform actions that can

modify the environment (including the agent's

internal state and/or position in the environment).

- An agent's choice of action is specified as an

agent function and implemented as an agent

program relative to a particular architecture.

- Agent functions are also known as policies

and there are various names for agent

programs depending on the application, e.g.,

controllers (robotics).

- In general, an agent's action-choice can

depend on its entire percept-history to

date; however, we will in this course

focus an agents whose action-choices

depend on the current percept.

- There are typically many ways to implement

an agent function, e.g., lookup tables,

IF-THEN statement blocks, neural networks.

- An agent may have knowledge about its environment,

e,g., environment model, game rules / allowable

moves.

- EXAMPLE: Some example agents:

- Roomba vacuum cleaner

- Human players in a game of chess

- Self-driving vehicle

- AI personal assistant

What are the sensors, percepts, actuators, actions, and

knowledge (if any) associated with each of these agents?

- An agent may have multiple internal states, with

percept / action pairs between these states

encoded in a state-transition function.

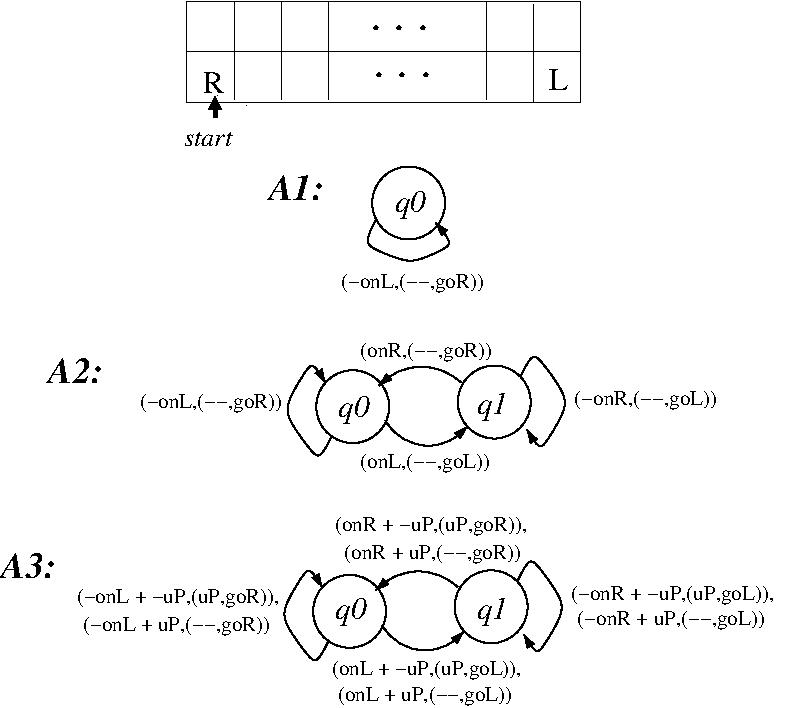

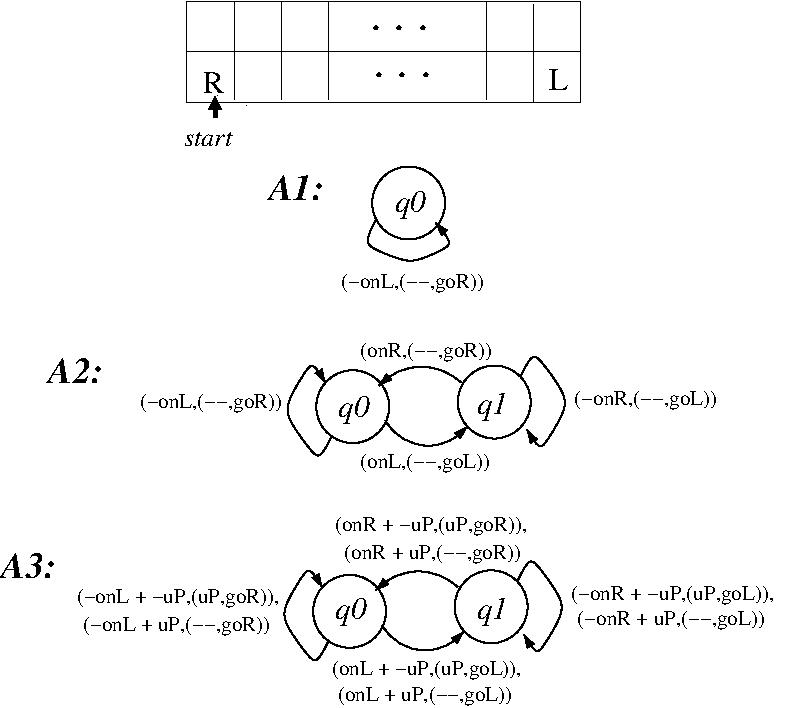

- EXAMPLE: Three deterministic finite-state agents that

operate in a 2D grid-world (Wareham and Vardy (2018)).

- State transitions are specified as (state, percept,

action, state) tuples, where an action is

specified as an (environment-change, agent move)

pair.

- Agent processing starts in state q0.

- What does each of these agents do?

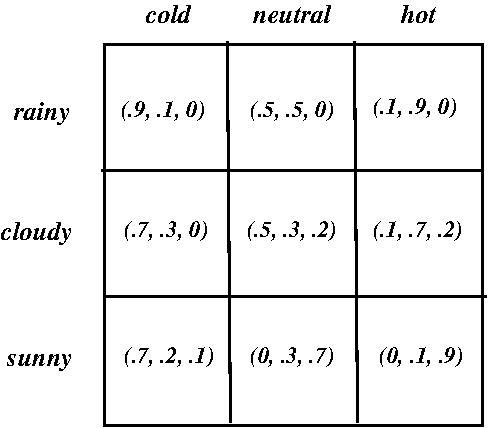

- EXAMPLE: A stochastic agent

- Specified as a table whose x- and y-axes are

percepts and table entries are probability-vectors

specifying the probabilities of a set of actions

relative to a set of x/y-percept combinations.

- Consider the following agent which specifies the

probabilities of

what to do (wear coat, carry umbrella, do nothing

extra) if you want to take a walk outside

depending on the weather ((rainy / cloudy / sunny)

x (cold / neutral / hot)):

- A rational agent does the best possible action

at each point wrt optimizing some pre-defined

performance measure; such an agent has an optimal

policy.

- Note that rationality is NOT omniscience, i.e.,

knowing the complete environment and the

outcomes of all (even random) actions.

- EXAMPLE: Going across street at crosswalk to

see friend vs not crossing street

because of incoming meteor from space.

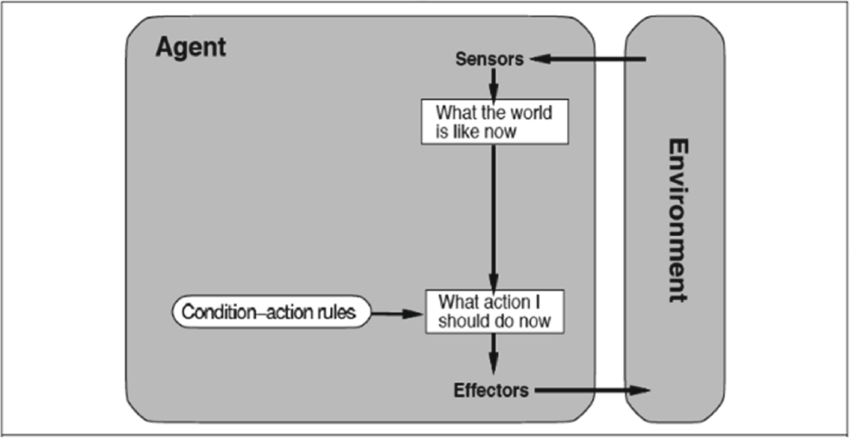

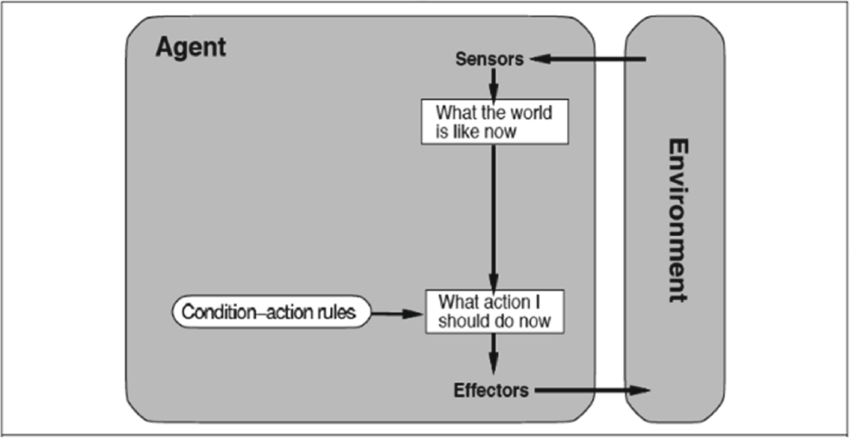

- Four basic types of agents:

- Simple reflex agents

- This simplest type of agent reacts without

understanding or planning by using

sets of encoded IF-THEN rules that

directly link percepts and actions, e.g.,

the deterministic finite-state and

stochastic agents given above.

- The intelligence encoded in such agents

can be impressive, e.g., Brooks' Genghis

robot, basic obstacle avoidance in

self-driving vehicles, but are ultimately

limited in that there is little understanding

or planning in the action-selection process.

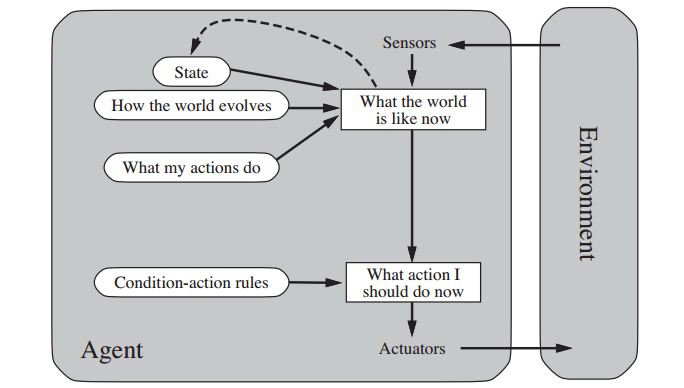

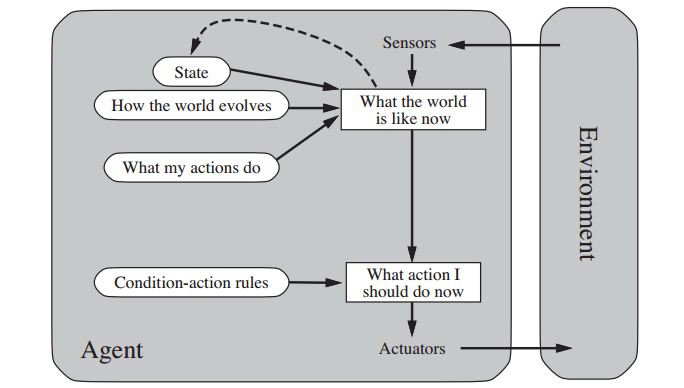

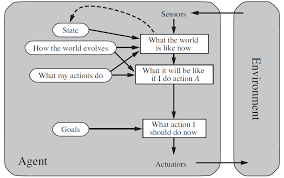

- Model-based agents

- This type of agent maintains a non-trivial

internal state that incorporates knowledge

of how the world works and a model of the

world encountered to date (including the

agent's own past actions in the world, e.g.,

the two-state deterministic finite-state

agent given above).

- The intelligence encoded in such agents now

allows understanding to be part of the

action-selection process.

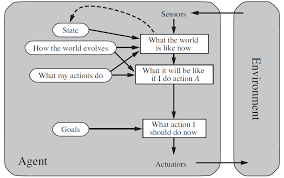

- Goal-based agents

- This type of agent incorporates a description

of an agent's wanted goal, e.g., the

destination to be reached, as well as a

way of evaluating if that goal has been

achieved, e.g., are we at the requested

destination?

- Typically more complex than simple reflex

agents but much more flexible in terms of

the intelligence it can encode (in particular,

planning as part of the action-selection

process).

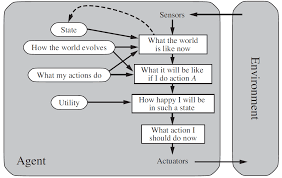

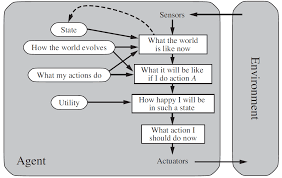

- Utility-based agents

- This type of agent incorporates a utility

function that internalizes some performance

measure, e.g., the distance traveled on

a particular path from the start point to

the destination, and is thus capable of

optimizing this performance measure, e.g.,

choosing the shortest path from a start

point to the destination.

- Such agents are more complex still but can

(given an appropriate utility function)

encode general rationality.

- Note that choosing the appropriate utility

function is crucial to the encoded degree

of rationality and this is typically

very hard to do.

- The intelligence in the four types of

agents above is hard-coded using hand-crafted

algorithms.

The most complex type of agent can learn from

experience and both become rational and

maintain this rationality in the face of changing

environments. The resulting agent programs

are machine-crafted and will be discussed in

the final lectures of this course.

Thursday, September 12 (Lecture #3)

[ DC3200, Lecture #2 and

DC3200, Lecture #3;

RN3, Chapters 2 and 3; Class Notes]

- Agents and Environments (Cont'd)

- Environments

- The current configuration of an environment is that

environment's current state.

- A configuration encodes all entities in an

environment, including the agents (and, if

applicable, the positions and internal states

of these agents) within the environment.

- EXAMPLE: A configuration of a Solitaire card

game would show all cards and their order,

both in the visible stacks of cards and the deck

of undrawn cards.

- What an agent perceives may not be a complete

representation of an environment's state;

however, such observations are all the agent

has to make its decisions.

- EXAMPLE: An agent playing a game of Solitaire can

only see the visible stacks of cards and their

orders in these stacks.

- Environments can be viewed as problems to which agents

provide solutions, e.g., a path between locations on a

map, a sequence of rule-applications that results in a

wanted goal.

- EXAMPLE: Some example agents:

- Roomba vacuum cleaner

- Human players in a game of chess

- Self-driving vehicle

- AI personal assistant

What are the environments, environment configurations,

and problems associated with each of these agents?

- Environments have associated state and action spaces.

- State space = the number of possible

configurations of the environment.

- Action space = the number of possible actions

relative to a state.

- Average / worst-case measures of the sizes of

state and action spaces are frequently used as

measures of environment complexity.

- Are good measures of the computational

complexity of searching state / action

spaces by exhaustive search.

- EXAMPLE: State space sizes for some games

- Tic-Tac-Toe: 10^4

- Chess: 10^{43}

- Go: 10^{172}

- EXAMPLE: Total # sequences of actions in some game

(game tree complexity)

- Tic-Tac-Toe: <= 10^5

- Chess: <= 10^{123}

- Go: <= 10^{360}

- Starcraft II: > 10^{1000)

- Given that there are approximately 10^{80} atoms

in the universe and these atoms are expected to

decay into a quark mist in 10^{70} years, the

opportunities for completing exhaustive searches

for the largest state spaces are severely limited;

that is why you need the smartest search algorithms

possible (some of which we will be looking at

over the next several lectures).

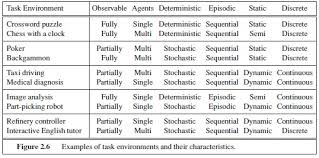

- Environments have several properties:

- Fully vs partially observable by agent, e.g.,

chess vs poker.

- Deterministic vs stochastic from viewpoint

of agent, e.g., chess vs poker.

- Episodic vs sequential, i.e., next episode does not

or does depend on previous episodes, e.g., OCR

(Optical Character Recognition) vs chess.

- Static vs dynamic, i.e., environment changes

discretely when agents act or continuously

(possibly even during agent decision), e.g.,

turn-based (chess) vs real-time (Starcraft) games.

- Discrete vs continuous conception of time and

environment change, e.g., poker /chess vs

self-driving vehicles.

- Single agent vs multiple agents, e.g., Solitaire

vs chess vs self-driving vehicles.

- Complete vs incomplete knowledge of actions and

their consequences, e.g., chess vs real life.

- Example environments and their properties:

- EXAMPLE: Some example agents:

- Roomba vacuum cleaner

- Human players in a game of chess

- Self-driving vehicle

- AI personal assistant

What are the properties of the environments associated

with each of these agents?

- The properties of an environment dramatically affect

the computational difficulties of designing agents for

and operating agents within environments; we will see

this in action over the course of our lectures, many

of which will consider only the simplest environments..

- Potential exam questions

- Problem-solving and search

- Problem-solving agents are rational agents that act in

environments to solve the problems posed by those

environments.

- In the simplest case, they are goal-based agents

that find a sequence of actions that satisfies

some goal, e.g., find a path from a start point to

a given destination.

- More complex cases involve utility-based agents

that find a goal-achieving sequence of actions

that optimize some performance measure, e.g., find

a shortest path (in terms of distance

traveled) from a start point to a specified

destination.

- EXAMPLE: Finding a (shortest) path from our

classroom at MUN to the Winners store at the

Avalon Mall.

- A solution (also known as a solution path) is

a sequence of actions that terminates in the

problem's goal.

- A well-defined problem consists of:

- An initial state that the agent starts in.

- The actions possible from each state.

- Specified as a successor function, e.g.,

from position (x,y) in a 2D grid-based maze,

you can go up, down, left, or right.

- This is typically refined relative to each

state to the viable actions for that state,

i.e., the actions that do not violate

constraints like moving into a wall in a

2D maze.

- The set of goal states

- Specified as a goal test function that returns

"Yes" if a given state is in the set of goal

states and "No" otherwise, i.e., are we

just outside the main door of the Winners

store at the Avalon Mall? Is our chess

opponent in checkmate?

- Action cost function

- Most problems assume positive non-zero

action costs.

- The cost of a solution is the sum of the costs

of the actions in that solution's associated path;

an optimal solution has the lowest cost over all

possible solutions.

- EXAMPLE: Shortest path between two vertices in an

edge-weighted graph G.

- Problem definition:

- Start vertex in G.

- For each vertex v in G, the set of edges

with v as an endpoint.

- Destination vertex in G.

- Edge-weight function for G.

- The problem objective is to find a shortest (in

terms of summed edge-weights) path in G from the

start vertex to the destination vertex.

- EXAMPLE: Shortest path between two locations in a

2D grid G incorporating obstacles.

- Problem definition:

- Start location in G.

- For each location l in G that is not part of an

obstacle, the subset of possible moves

{up, down, left, right} that do not land on

some obstacle when executed at l.

- Destination location in G.

- Function that assigns cost 1 to up, down,

left, and right moves.

- The problem objective is to find a shortest (in

terms of # of moves) path in G from the

start location to the destination location.

- EXAMPLE: Most efficient plan for making a cup of tea.

- Problem definition:

- State consisting of {cup(empty), teaBag,

kettle(empty), faucet, electricity}.

- The set A of actions, i.e.,

- kettle(empty) + faucet => -kettle(empty) +

kettle(fullCold)

[fill kettle with cold water]

- cup(empty) + faucet => -cup(empty) +

cup(fullCold)

[fill cup with cold water]

- kettle(fullCold) + electricity =>

-kettle(fullCold) + kettle(fullHot)

[boil kettle]

- cup(fullCold) + electricity =>

-cup(fullCold) + cup(fullHot)

[boil cup]

- cup(empty) + teaBag => -teaBag +

-cup(empty) + cup(teaBag)

[add tea bag to cup]

- cup(empty) + kettle(fullHot) =>

-cup(empty) + cup(fullHot)

[add hot water to cup]

- cup(teaBag) + kettle(fullHot) =>

-cup(teaBag) + cup(tea)

[make tea I]

- cup(fullHot) + teaBag =>

-cup(fullHot) + -teaBag + cup(tea)

[make tea II]

Each action X => Y (where X is the cause

and Y is the consequence) is an IF-THEN

rule whose application to a state s is viable

if for each x (-x) in X, x is (not) in s and

results in a state s' that modifies s by

adding (removing) each y to (from) s such that

y (-y) is in Y, e.g., the initial state is

modified to state s' = {cup(teaBag),

kettle(empty), faucet, electricity} by the

application of action 5.

Given the above, for each possible state s

that is a subset of {faucet, electricity,

kettle(empty), kettle(fullCold),

kettle(fullHot), teaBag, cup(empty),

cup(fullCold), cup(fullHot), cup(teaBag),

cup(tea)}, the set of viable actions for s is the

subset of A that can be viably applied to s.

- Any state including {cup(tea)}.

- Function that assigns cost 1 to each action.

- The problem objective is to find a most efficient

(in terms of # of actions) plan that, starting in

the initial state, creates a hot cup of tea.

- The formalism above is a simplified version of

the input language for the STRIPS (STanford

Research Institute Problem Solver) automated

planning system (Fikes and Nilsson (1971); see

also Bylander (1994)) and will be used in Assignment #2.

- The type of state in this problem has what is

known as a factored representation, i.e.,

a state with a

multi-component internal structure; we will be

using this type of problem-state frequently

later in the course.

Tuesday, September 17 (Lecture #4)

[DC3200, Lecture #3;

RN3, Chapter 3; Class Notes]

- Problem-solving and search (Cont'd)

- Search is the process of exploring a problem's search

space to find a solution. This process generates a

search tree in which the root node, edges, and

non-root nodes correspond to the initial problem

state, actions, and possible solution paths.

- Starting with the root node, the search tree is

generated by expanding nodes, i.e., generating

the nodes reachable from a node n by viable actions,

in some specified manner until either a goal

node is found or it is established that no

goal-state is reachable by any sequence of actions

starting at the root node.

- At each point in this process, the list of

leaf nodes that has been generated but

not yet expanded is known as the fringe.

- Search algorithms can be distinguished in

how they select nodes from the fringe to

expand.

- Each search tree node corresponds to problem

state; however, if actions are reversible,

a problem state can be associated with multiple

search tree nodes and a finite-size problem state

space can have an infinite-size search tree.

- EXAMPLE: Search tree for problem of finding a

shortest path between two vertices in a graph.

[DC3200, Lecture #3, Slides 14-26

(PDF)]

- EXAMPLE: Search tree for 15 puzzle

[DC3200, Lecture #3, Slides 27-28

(PDF)]

- Must distinguish problem-states and search-tree

nodes - the former are configurations of the

problem environment and the latter are bookkeeping

data structures allowing recovery of solution

paths to goals. To this send, a search tree node

n typically contains the following data:

- The problem state associated with n.

- A pointer to n's parent node, i.e., the node

whose expansion generated n.

- The action by which n was generated from its

parent node.

- The cost of the sequence of actions on the

path in the search tree from the root node

to n.

- This is traditionally denoted by g(n).

- The depth of n, i.e., the number of actions on

the path in the search tree from the root node

to n.

Additional data may also be stored in the node

depending on the search algorithm used.

- ALGORITHM: General uninformed tree search

1. Function Tree-Search(problem, strategy)

2. fringe = {Node(problem.initial_state)}

3. while (true)

4. if (fringe.empty) return fail

5. node = strategy.select_node(fringe)

6. if (node.state is goal) return solution

7. else fringe.add(Expand(node, problem))

- ALGORITHM: Node expansion [ASSIGNMENT #1]

1. Function Expand(node, problem)

2. successors = {}

3. for a in viablActions(problem.actions, node)

4. s = Node(node.state.do_action(a))

5. s.parent = node

6. s.action = a

7. s.g = node.g + s.action.cost

8. s.depth = node.depth + 1

9. successors.add(s)

10. return succesors

- We will build on these algorithms below when we

consider different search strategies.

Search strategies

- Measures for assessing the problem-solving performance

of a search-strategy algorithm s:

- Completeness, i.e., is s guaranteed

to find a solution if it exists?

- Optimality, i.e., is s guaranteed

to find an optimal solution?

- Time complexity, i.e., how long does it take for

s to find a solution or establish that a solution

does not exist?

- Measures the total number of search tree nodes

generated.

- Space complexity, i.e., how much memory is needed

to execute s?

- Measures the maximum number of search tree

nodes that must be stored at any point in the

search process.

Aspects of the search tree typically used to derive

time and space complexity functions are the branching

factor b (the maximum number of successor nodes for

any tree node) and the depth d (of the shallowest

goal node, if a solution exists, and the maximum depth

of a generated node, if one does not). Note that the

size of a search tree is upper-bounded by b^{d+1}.

- Two categories of search strategies:

- Uninformed (blind) search

- Has no extra information about states in a

search tree beyond that in the problem

description, i.e., algorithm operations

limited to successor, generation, and

goal test.

- Informed search

- Has extra information which allows

guesses about which states are more

likely to be on useful / optimal

solution paths, i.e., algorithm operations

additionally include the value of heuristic

node evaluation function h(n).

- Hopefully leads to faster search.

We will look first at uninformed search strategies

and then move on to informed search strategies, with

an intermediate stop to consider closed lists, a

key algorithmic technique for optimizing the

performance of both types of search strategies.

Uninformed (blind) search strategies

- Breadth-First Search (BFS)

Lines modified from the algorithm for general

uninformed tree search are indicated by "<=".

- EXAMPLE: BFS for a shortest (# edges) path between

vertices A and C in an unweighted graph.

[DC3200, Lecture #3, Slides 44-48

(PDF)]

- EXAMPLE: BFS for a shortest (Manhattan distance) path between two

locations in a 2D grid with obstacles

[DC3200, Lecture #3, Slides 49-54

(PDF)]

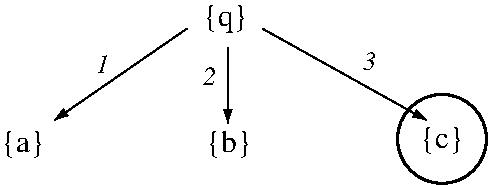

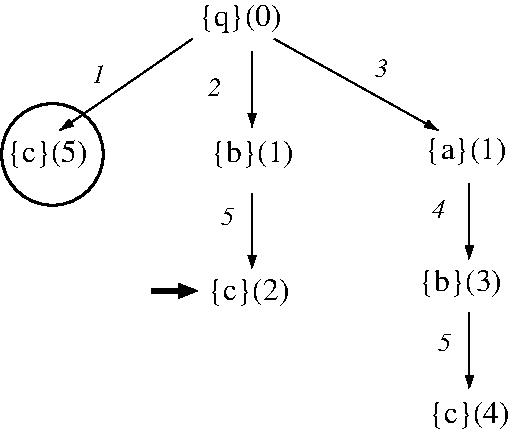

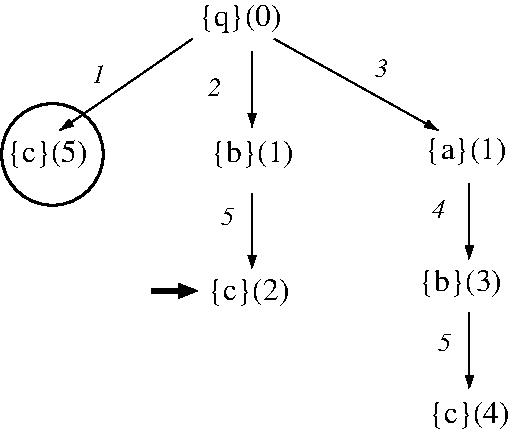

- EXAMPLE: BFS for a shortest (summed

action-cost) solution to a modified

STRIPS planning problem based on facts

[q, a, b, c], initial state [q], goal state

[c], and actions

- q => -q + a (cost 1)

- q => -q + b (cost 1)

- q => -q + c (cost 1)

- a => -a + b (cost 1)

- b => -b + c (cost 1)

- Performance of BFS

- BFS is complete.

- BFS can explore the entire search tree;

hence, if there is a goal node

in the tree, BFS will find it.

- Note that if there are multiple

goal nodes in the search tree,

BFS will find the shalowest one.

- BFS is not optimal in general.

- This is because the path to the

shallowest goal node need not

have the optimal cost.

- EXAMPLE: BFS is not optimal for finding a

shortest (summed edge-weights) path between vertices A and C in an

edge-weighted graph.

[DC3200, Lecture #3, Slide 57

(PDF)]

- EXAMPLE: BFS is not optimal in finding a

shortest (summed action-cost) solution to a modified

STRIPS planning problem based on facts

[q, a, b, c], initial state [q], goal state

[c], and actions

- q => -q + c (cost 5)

- q => -q + b (cost 1)

- q => -q + a (cost 1)

- a => -a + b (cost 2)

- b => -b + c (cost 1)

- BFS is optimal if (1) path costs

never decrease as depth increases

or (2) all action costs are the

same.

- Time Complexity: O(b^{d+1}), as all nodes

in a search tree of depth d with

branching factor b may be generated.

- Space Complexity: O(b^{d+1}), as all

generated nodes must be stored in

memory to allow reconstruction of

solution paths from goal nodes via

parent pointers.

Uniform Cost Search (UCS)

Lines modified from the algorithm for general

uninformed tree search are indicated by "<=".

EXAMPLE: UCS for a

shortest (summededge-weights) path between vertices A and C in an

edge-weighted graph.

[DC3200, Lecture #3, Slide 57

(PDF)]

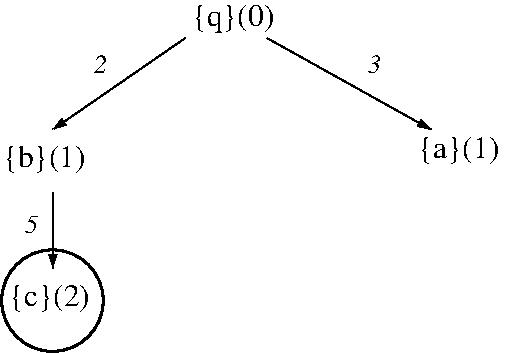

EXAMPLE: UCS for a shortest (summed

action-cost) solution to a modified

STRIPS planning problem based on facts

[q, a, b, c], initial state [q], goal state

[c], and actions

- q => -q + c (cost 5)

- q => -q + b (cost 1)

- q => -q + a (cost 1)

- a => -a + b (cost 2)

- b => -b + c (cost 1)

Performance of UCS

- UCS is complete

- UCS is optimal if action costs > 0 (epsilon)

- Time Complexity: O(b^{1 +

floor(C*/epsilon)}), where C* is the

cost of an optimal solution

- This is necessary because path

cost is no longer linked to

depth.

- Can be much bigger than b^{d+1} is the

worst case.

- Space Complexity: O(b^{1 +

floor(C*/epsilon)})

Thursday, September 19 (Lecture #5)

[DC3200, Lecture #3;

RN3, Chapter 3; Class Notes]

- Problem-solving and Search (Cont'd)

- Uninformed (blind) search strategies (Cont'd)

- Depth-First Search (DFS)

Lines modified from the algorithm for general

uninformed tree search are indicated by "<=".

- ALGORITHM: DFS (recursive)

1. Function DFS(node, problem)

2. if (node.state is goal) return solution

3. successors = Expand(node, problem)

4. for (s in successors):

5. DFS(s, problem)

Call DFS(root_node, problm)

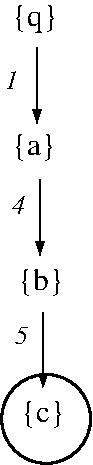

- EXAMPLE: DFS for a solution to a modified

STRIPS planning problem based on facts

[q, a, b, c], initial state [q], goal state

[c], and actions

- q => -q + c (cost 1)

- q => -q + b (cost 1)

- q => -q + a (cost 1)

- a => -a + b (cost 1)

- b => -b + c (cost 1)

- Performance of DFS

- DFS is not complete in general

- Can enter infinite loop if does

not keep track of already-visited

states.

- EXAMPLE: DFS for a

path between vertices A and B in an

unweighted graph.

[DC3200, Lecture #3, Slide 57

(PDF)]

- Infinite loops can be prevented

by algorithm

enhancements (see DLS and ID-DFS

below) or by the

use of closed lists (see below).

- DFS is not optimal in general

- This is because it returns the

first goal found.

- EXAMPLE: If B is visited before D, DFS is

not optimal for finding a shortest (summed

edge-weight) path between vertices A and C

in an

edge-weighted graph.

[DC3200, Lecture #3, Slide 57

(PDF)]

- EXAMPLE: DFS is not optimal for

finding a shortest (either # moves

or summed action-cost) solution to

the modified STRIPS planning

problem given above.

- Time Complexity: O(b^m), where m is

is the depth of the deepest node.

- Space Complexity: O(bm)

- This is because in the worst case,

DFS stores a path of m nodes from

the route along with the b

successors of each node on this

path.

- Depth-Limited Search (DLS)

- Solves the infinite-loop DFS problem by

limiting the maximum search depth to some

L > 0.

- ALGORITHM: DLS (recursive)

1. Function DLS(node, L, problem)

2. if (node,depth > L) return "cutoff" <=

3. if (node.state is goal) return solution

4. successors = Expand(node, problem)

5. for (s in successors):

6. DLS(s, L, problem)

Call DLS(root_node, L, problm)

Lines modified from the algorithm for DFS

(recursive) are indicated by "<=".

- Performance of DLS

- DLS is not complete

when solution depth > L

- DLS is not optimal in general

- This is because it returns the

first goal found.

- Time Complexity: O(b^L)

- Space Complexity: O(bL)

Iterative Deepening Depth-First Search (ID-DFS)

- In DLS, one chooses maximum search depth L

by analysis of the problem's state space;

alternatively, one can gradually increase

the depth limit until a solution is found.

This is what ID-DFS does.

- ALGORITHM: ID-DFS

1. Function ID-DFS(node, , problem)

2. for d in (1,infty)

3. result = DLS(node, d, problem)

4. if (result != "cutoff") return result

Performance of ID-DFS

- ID-DFS is complete

- ID-DFS is optimal under same conditions

as BFS

- Time Complexity: O(b^{d+1}), where d is the

depth of the shallowest goal node

- As nodes may be recomputed as

L is increased, may seem wasteful;

however, in practice, generates

about twice as many nodes as BFS.

- Space Complexity: O(bd)

As it combines the advantages of both BFS and

DFS, ID-DFS is in general preferable to both

BFS and DFS.

TABLE: Uninformed search strategy comparison

[DC3200, Lecture #3, Slide 79

(PDF)]

INTERLUDE: A look at the mechanics of

Assignment #1.

- How do you modify the BFS, UCS, and DFS

(non-recursive) algorithms above to

print all solutions to a given

problem?

None of the algorithms presented above remember the

states that have already been expanded. Such states

lead to duplicated effort and (if actions are

reversible) infinite loops.

Such states can be remembered and avoided using

closed lists, which are lists of previously expanded

(and hence explored) nodes.

- To ensure efficient lookup, closed lists are often

implemented using dictionaries and hash tables

(the latter of which will be described in detail

in several lectures).

In addition to eliminating infinite loops, such

lists can lead to an exponential savings in the

number of nodes generated; however, these lists

are not universal cures for all of the ills that

can plague search

(see below).

Following terminology in Russell and Norving, tree

search algorithms that use closed lists (1) rename

fringe lists as open lists and, perhaps more

confusingly, (2) are renamed graph search algorithms

(after the classic BFS graph search algorithm that

implements closed lists implicitly using a node

coloring scheme to avoid re-exploring

previously-encountered vertices).

ALGORITHM: General uninformed graph-based search

1. Function Graph-Search(problem, strategy)

2. closed = {} <=

3. open = {Node(problem.initial_state)}

4. while (true)

5. if (fringe.empty) return fail

6. node = strategy.select_node(open)

7. if (node.state is goal) return solution

8. if (node.state in closed) continue <=

9. closed.add(node.state) <=

10. open.add(Expand(node, problem))

Lines modified from the algorithm for general

uninformed tree search are indicated by "<=".

ALGORITHM: Graph-based BFS

1. Function gbBFS(problem)

2. closed = {} <=

3. open = Queue{Node(problem.initial_state)}

4. while (true)

5. if (fringe.empty) return fail

6. node = open.pop()

7. if (node.state is goal) return solution

8. if (node.state in closed) continue <=

9. closed.add(node.state) <=

10. open.add(Expand(node, problem))

Lines modified from the algorithm for (tree-based) BFS

are indicated by "<=".

ALGORITHM: Graph-based DFS

1. Function gbDFS(problem)

2. closed = {} <=

3. open = Stack{Node(problem.initial_state)}

4. while (true)

5. if (fringe.empty) return fail

6. node = open.pop()

7. if (node.state is goal) return solution

8. if (node.state in closed) continue <=

9. closed.add(node.state) <=

10. open.add(Expand(node, problem))

Lines modified from the algorithm for (tree-based) DFS

are indicated by "<=".

Graph-based search algorithms still generate search

trees; however, as each problem state can now only

label only one search tree node, the search tree is

effectively overlaid on the state-space graph whose

vertices correspond to problem states and arcs

correspond to actions linking pairs of states.

- Problem states may repeat in the fringe data

structure, as states may be encountered several

times before they are expanded and added to the

closed list.

EXAMPLE: Operation of gbBFS in finding

a shortest (# edges) path between vertices A and C in

a graph. [txt]

EXAMPLE: Operation of gbDFS; in finding a path between

vertices A and C in a graph.

[txt]

Performance of a graph-based version of search

strategy s

- Completeness is as in the tree-based version of s.

- Optimality depends on s.

- As graph-based search effectively discards

all paths to a node but the one found first,

it is only optimal if we can gaurantee that

the first solution found is the optimal

one, e.g., BFS or UCS with all actions having

the same cost.

- Time Complexity: O(#states)

- This is because each state is expanded

at most once and in the worst case,

every state in the environment must be

expanded; hence, it is the same for

every search strategy.

- In practice, the actual number of generated

states is far lower than this worst case.

- Space Complexity: O(#states)

- This is because of the closed list.

- Note that this also applies to tree-based

versions that ran in linear space, e.g., DFS

and its associated variants.

Tuesday, September 24 (Lecture #6)

[DC3200, Lecture #5;

RN3, Chapter 3; Class Notes]

- Problem Solving and Search (Cont'd)

- Informed (heuristic) search strategies

- Uses problem-specific knowledge encoded as

heuristic functions that (ideally, but not

always) cut down the total number of nodes

searched and speed up search times to goal.

- Heuristic function h(n) gives a (hopefully

good) estimate of the cost of the optimal

path from node n to a goal node. If n is a

goal node, h(n) = 0.

- h*(n) = perfect heuristic which gives exact

cost of optimal path from n to goal node

(and wouldn't that be nice ...)

- EXAMPLE: A heuristic h1(n) for shortest-path in

edge-weighted graphs is the cost of the

lowest-cost edge

originating at n that does not double back to

an already-explored node.

- EXAMPLE: A heuristic h2(n) for shortest-path in 2D

grids is the Manhattan distance from n to the

the requested location.

- EXAMPLE: A heuristic h3(n) for efficient plans to

make tea is the modified Hamming distiance from

the problem state n to a possible goal state.

- Replace all non-specified items in the goal

by * to generate pg and then compute the

Hamming distance between the non-star

positions in pg and the state encoded in n.

- For example, given a goal p1 + -p3 + p4 in

a five-item state, pg = 1*01* and the

modified Hamming distance from pg to

sn = 01000 is 2 (position 3 matches and

positions 1 and 4 do not match).

- Types of heuristics

- An admissible heuristic never

overestimates the distance from a node

n to a goal node, i.e., h(n) <= h*(n ).

- Can be seeen as an optimistic guess.

- When h() is admissible, f(n) = g(n) +

h(n) never overestimates the cost

of a best-possible path from the initial

state to a goal state that goes

through n.

- A consistent heuristic (also called a

monotone heuristic) has

|h(a) - h(b)| <= c(a,b), where c(a,b) is the

optimal cost of a path from a to b.

- With such a heuristic, the difference of

the heuristic values of two nodes must

be less than or equal to the optimal

cost of a path between those nodes.

- Every consistent heuristic is also

admissible; indeed, it is hard (but not

impossible) to construct an admissible

heuristic that is not consistent.

- When h(n) is consistent, the values

of f(n) = g(n) + h(n) along any

path are non-decreasing; this will turn

out to be very useful later in our

story.

- EXAMPLE: Which of the heuristics h1(n), h2(n),

and h3(n) above are admissible? Which are

also consistent?

- Informed search strategies differ in how they

combine g(n) and h(n) to create a function

f(n) that is used to select the next node

in the open list to expand.

- Tree-based Greedy Best-First BFS (GBeFS)

- Select node in open list with

lowest f(n) value, where f(n) = h(n),

i.e., only cares about distance to goal.

- Resembles DFS in that it tries a single

path all the way to the goal and backs

up when it hits a "dead end".

- EXAMPLE: BFS vs GBeFS in shortest-path in 2D

grid world with obstacles.

[DC3200, Lecture #5, Slides 19-33

(PDF)]

- ALGORITHM: Tree-based GBeFS

1. Function GBeFS(problem, h(n))

2. open = PriorityQueue{f(n) = h(n)} <=

3, open.add(Node(problem.initial_state))

f. while (true)

5. if (open.empty) return fail

6. node = open.min_f_value() <=

7. if (node.state is goal) return solution

8. else open.add(Expand(node, problem))

Lines modified from the algorithm for UCS

are indicated by "<=".

- Performance of GBeFS (similar to DFS)

- GBeFS is not complete

- May not find goal; may even "get

lost" and retrace paths if

heuristic is bad.

- GBeFS is not optimal

- Time Complexity: O(b^m), where m =

maximum explored node depth.

- Space Complexity: O(bm)

- Though performance not so great in general,

performs well for carefully crafted

heuristics; such heuristics can be derived

using means-ends analysis.

- GBeFS was the core search strategy underlying

the earliest automated reasoning systems

Logic Theorist (1956) (Newell and Simon

(1956)) and GPS (General Problem Solver

(1959) (Newell, Shaw, and Simon (1959)) as

well as foundational computational modeling

work on problem solving by humans

(Newell and Simon (1972)).

- Problem Solving and Search (Cont'd)

- Informed (heuristic) search strategies (Cont'd)

- Tree-based A* search

- Most well-known BeFS algorithm.

- Select node in open list with

lowest f(n) value, where f(n) = g(n) + h(n),

i.e., cares about total distance from

start state to goal.

- ALGORITHM: Tree-based A* search

1. Function A*(problem, h(n))

2. open = PriorityQueue{f(n) = g(n) + h(n)} <=

3, open.add(Node(problem.initial_state))

f. while (true)

5. if (open.empty) return fail

6. node = open.min_f_value() <=

7. if (node.state is goal) return solution

8. else open.add(Expand(node, problem))

Lines modified from the algorithm for UCS

are indicated by "<=".

- Performance of Tree-based A*

- Tree-based A* is complete

- Will eventually visit all nodes

in the search tree, starting with

those who h-values are lowest.

- Tree-based A* is optimal if h(n) is admissible

- Time Complexity: O(b^m), where m =

maximum explored node depth.

- Space Complexity: O(bm)

- Graph-based A* search

- Let us now try to optimize the runtime of

A* search by using closed lists.

- ALGORITHM: Graph-based A* search

1. Function gbA*(problem, h(n))

2. closed = {} <=

3. open = PriorityQueue{f(n) = g(n) + h(n)}

4, open.add(Node(problem.initial_state))

5. while (true)

6. if (open.empty) return fail

7. node = open.min_f_value()

8. if (node.state is goal) return solution

9. if (node.state in closed) continue <=

10. closed.add(note.state) <=

11. open.add(Expand(node, problem))

Lines modified from the algorithm for

tree-based A* search are indicated by "<=".

- Performance of Graph-based A*

- Graph-based A* is complete

- Graph-based A* is optimal if h(n) is consistent

- Admissible heuristics do not

suffice for optimality, as

"raw" graph-based search only

retains the first-encountered path

to a repeated state (where a

later-encountered path may be

better).

- If a consistent heuristic is used,

the values of f(n) along any path

are non-decreasing; as the

sequence of nodes expanded by

graph-based A* is in non-decreasing

order of f(n), any node selected

for expansion is on an optimal

path as all later nodes are at

least as expensive!

- Time Complexity: O(b^m)

- Space Complexity: O(b^m)

- EXAMPLE: (VERY detailed) Graph-based A* search

for path between two locations in a 2D grid

with obstacles

[DC3200, Lecture #5, Slides 68-147

(PDF)]

- Graph-based A* also has the property that

no other optimal uninformed search algorithm

can expand fewer nodes; that being said, the

number of nodes that need to be searched and

retained (as part of the closed list) is

still exponential in the worst case and can

be prohibitive in practice.

Potential exam questions

There are a variety of ways of improving the performance in

practice (though not in the worst case) of the search

algorithms above; these ways tend to be specific to

particular types of environments, e.g., 2D grids

(see DC3200, Lecture #7), or search algorithms, e.g.,

graph-based A* search (see DC3200, Lecture #5).

An important example of the latter is using appropriate data

structures to optimize membership query time in open and

closed lists in graph-based search. Some common data

structures and their worst-case membership query times for

an open / closed list with n items are:

- List /array: O(n)

- Set / dictionary (via balanced search trees): O(log n)

- Hash table: O(n)

- Perfect hash table: O(1)

Given their simplicity and the possibility of O(1) membership

query time, it is worth looking at hash tables and the

manners in which they can be made as perfect as possible.

Hash Tables: Overview and Implementation

- A hash table is at its heart a 1D array that stores

instances of more complex data structures for easy

lookup.

- A commonly-stored data structure is key-value

pairs.

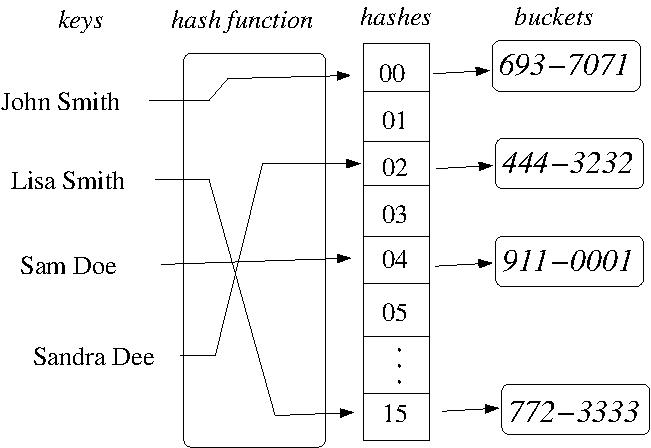

- EXAMPLE: A hash table for person name / phone number

pairs with 16 buckets.

- Given a data structure d, a hash function is used to

compute an integer that is d's (initial) position in the

hash table (also called d's bucket). Such functions are

made as simple as possible to optimize membership query

time.

- As keys are typically less

complex than their associated values, the hash

function is applied to the key and the full

key / value pair is stored in the bucket.

- In a perfect hash table (see example above), each data

structure maps to a

unique position in the hash table (giving O(1)

membership query time if the hash function is simple

enough).

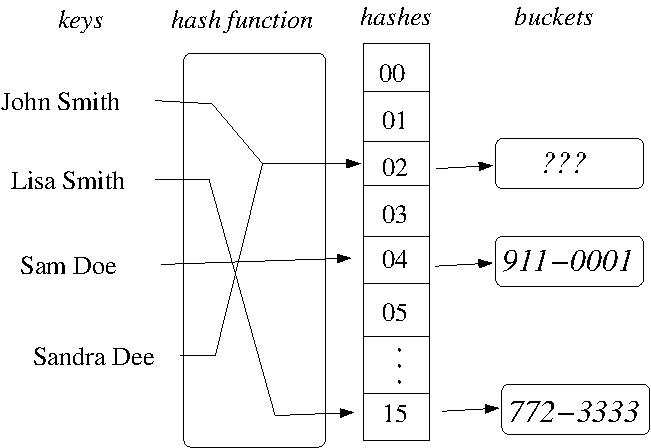

- If two or more data structure map to the same

position, i.e., a hash collision has occured, an

appropriate collision resolution policy is used to

redistribute the colliding items in the hash table such

that membership query time is degraded as little as

possible.

- EXAMPLE: A hash collision in a hash table for

person name / phone number pairs with 16 buckets.

Hash functions

- Have many uses in addition to hash tables, e.g.,

password protection, data verification (checksum

creation), proximity queries in computational geometry

(geometric hashing).

- Hash functions typically have an associated input

format and range of input values; if a hash function is

applied to an unexpected value or a value outside the

given range, the results may be poor or undefined.

- EXAMPLE: Hashing a character string to an integer.

[DC3200, Lecture #6, Slide 18

(PDF)]

- Output ranges, i.e., the available storage indices in a

hash table, can be ensured by the modulus operator.

- Hash function properties

- There a variety of properties a hash function

can have, whose necesssity depends on the

application, e.g.,

- Determinism (A good hash function should

always produce the same output for

the same input)

- Uniformity (a good hash function maps inputs as

evenly as possible over its output range to

prevent hash collisions)

- Data Normalization (Ignores irrelevant features

of the input, e.g., upper / lower case

letters; is application-dependent, e.g.,

data lookup (good) vs password protection

(bad)).

- Continuity (Small changes in the input have

small changes in the output; is

application-dependent, e.g., geometric

hashing (good) vs password protection

(bad))

- Reversibility / invertibility (input can be

easily recovered from the output; is

application-dependent, e.g., geometric

hashing (good) vs password protection

(bad))

- One can also characterize hash functions in terms

of purely mathematical properties, e.g.,

- Injective (Every input maps to a unique

output, i.e., the function is one-to-one)

- Note that some outputs may not be mapped.

- (Many) Injective functions are

invertible.

- Such functions are perfect hash

functions!

- Surjective (Every output has at least input

that maps to it, i.e., the function is onto)

- (Many) Surjective functions are not

invertible.

- Bijective (Every input maps to a unique output

and every output maps to a unique input,

i.e., the function is one-to-one and onto)

- Such functions are minimal perfect

hash functions, i.e., they are perfect

hash functions with the

smallest-possible associated hash

tables!

- EXAMPLE: Diagrams of injective, surjective and

bijective functions

[DC3200, Lecture #6, Slide 40

(PDF)]

- Hash function algorithms

- Simple hash functions

- Trivial hashing: When (typically small) data

can be directly translated to its hash

value, e.g., h(ASCII character) = ASCII

character code.

- Modulus hashing: h(x) = x mod n

- Only applicable to integers

- Focuses on lower-order bits in given

integer; truncates higher-order bits.

- Can have uniformity problems if x is not

a multiple of n.

- Binning hashing: h(x) = x / n

- Only applicable to integers

- Focuses on higher-order bits in given

integer; truncates lower-order bits.

- Uniformity characteristics almost the

opposite of modulus hashing.

- String hashing

- Character summation hashing: Sum the ASCII /

Unicode values of the characters in the

string.

- Block summation hashing: Treat each succesive

block of n characters as a single long

integer whose bits are the concatenated

ASCII / Unicode codes for those characters

and sum the long integers corresponding to

all character-blocks.

- EXAMPLE: What are the characteristics of

both of these schemes wrt -jectivity,

uniformity, invertibility, and input

character order?

- Geometric hashing

- Converts n-dimensional location input

(x1, x2, ..., xn) into an integer.

- For a 2D grid with width wd and height ht,

n(x,y) = (y * wd) + x; note that this

h() is a minimal perfect hash function

whose output i is invertible (y = i / wd,

x = i % wd).

Hash collisions: Overview and Resolution Policies

- Collision resolution is expensive and should be

avoided where possible.

- Perfect (injective) hash functions ensure that there

are no collisions; however, this requires that the

input space is smaller than the hash table, which

is seldom the case -- indeed, the input space is

typically MUCH larger than the hash table.

- If one is forced (as one typically is) to use imperfect

hash functions, the probability of a collision

rises surprisingly fast with each new hash.

- The probability of a hash collition after n hashes

with a uniform hash function into a hash table

with m buckets is 50% when n is approximately

sqrt(m).

- EXAMPLE: The Birthday Paradox

- The probability of two people having the

same birthday is 50% when there are only

23 people!

- This is effectively the probability of a

hash colision when hashing n = 23 (birthday /

person name) pairs into a hash table with

m = 365 buckets.

- EXAMPLE: The probability of a hash collision after

n = 70,000 (250,000) hashes into a hash

table indexed by 32-bit integers, i.e.,

m = 2^{32}, is 50% (99%).

- Another way of quantifying this degredation of hash

table performance is the load factor = n/m, with

performance decreasing (due to hash collisions) as

load factor increases.

- There a variety of hash collision resolution policies,

e.g.,

- Overwiting

- If a bucket is already occupied, overwrite it.

- There are a variety of ways of choosing which

of the old and new values to store, e.g.,

newest, oldest, minimum, maximum, deepest

search result.

- Though simple, this policy loses too much data

and hence is not used in practice.

- Chaining

- Make each bucket a list, and whenever data d

hashes to a bucket, add d to that bucket's

list.

- Allows a hash table to grow beyond its initial

size as necessary.

- If the hash function is uniform, each bucket's

list will be small and membership queries

will be as efficient as possible, i.e.,

query time = average list size.

- In worst case, could have one bucket with

all n hashed data items in that bucket's list,

giving O(n) query time.

- Open Addressing (OA)

- Requires that each data item have two

components k1 and k2 that can function as

lookup keys.

- General procedure for inserting data item

d whose k1 and k2 components have values

v1 and v2:

- If table location h(v1) is unoccupied,

store d there.

- If table location h(v1) is occupied and

its k2 component does not have value v2,

scan forward in table (possibly

repeatedly) in some fixed manner m until

an unoccupied location is found to

store d.

- General procedure for membership query on

data item d whose k1 and k2 components have

values v1 and v2:

- If table location h(v1) is unoccupied,

return false.

- If table location h(v1) is occupied and

its k2 component has value v2,

return true.

- Otherwise, scan forward in table (possibly

repeatedly) in some fixed manner m until

an unoccupied location is found (return

false), an occupied location is found

whose k2 component has value v2 (return

true), or all table locations have been

checked (return false).

- There are a variety of forward scanning

options, e.g.,

- linear probling (advance forward one

location at a time, i.e., scan interval

is 1)

- Can result in clustering of occupied

table locations.

- If few dense clusters in table, can

dramatically slow queries.

- quadratic probing (scan interval is

is quadratic function of d)

- double hashing (scan interval is

secondary hash of d)

Many other options exist, all seeking to

minimize clustering

- Coalesced hashing

- Combines chaining and OA by making

each bucket's list consist of other

buckets in the table.

- Superior memory performance to chaining by

itself as long as the number of stored

data items does not exceed the table sie.

- Coalesced Hashing

There is no universally-applicable hash table scheme --

rather, there are best (found to date) schemes for particular

applications, e.g., Zobrist hashing for game-state lists

in chess.

Friday, September 27

Tuesday, October 1 (Lecture #8)

[RN3, Chapter 4; Class Notes]

- Problem Solving and Search (Cont'd)

- Before we leave the topic of single-agent search, it is

worth looking at a class of search methods that are not

necessarily complete or optimal but are often used in

practice.

- Local search

- Useful for problems where the goal state is what is

wanted and the path of actions used to create that goal

is irrelevant, e.g., combinatorial optimization

problems like Maximum Clique:

Maximum Clique

Input: A graph G = (V,E).

Output: A maximum clique of G, i.e., a

largest-possible subset

V' of V such that each pair of vertices in V' is

connected by an edge in G.

- Assumes a problem state-space where each state s

has a "neighbourhood" N(s) of states, each of which are

reachable by applying a single action to s.

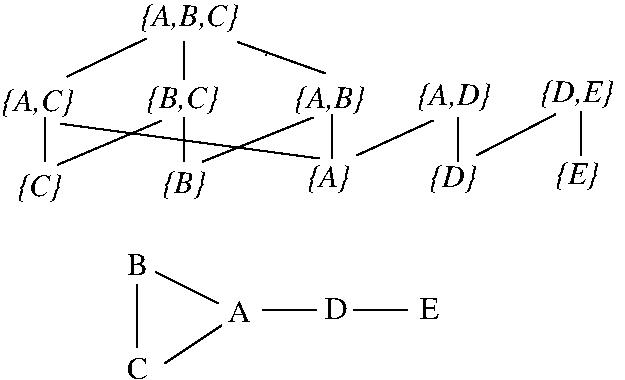

- EXAMPLE: The state space for the Maximum Clique

problem for a graph G.

The state space is the set of all subsets of vertices

of G that define clique-subgraphs, e.g., {A,B,C},

{D,E}, {E}. The neighbourhood of a state s is the

set of all states corresponding to those vertex-subsets

of G that induce clique-subgraphs and that can be

created by the addition or removal of a single vertex

to or from s, e.g., the neighbourhood of state {B,C}

is {{A}, {B}, {A,B,C}}.

- These neighbourhoods, when combined with a

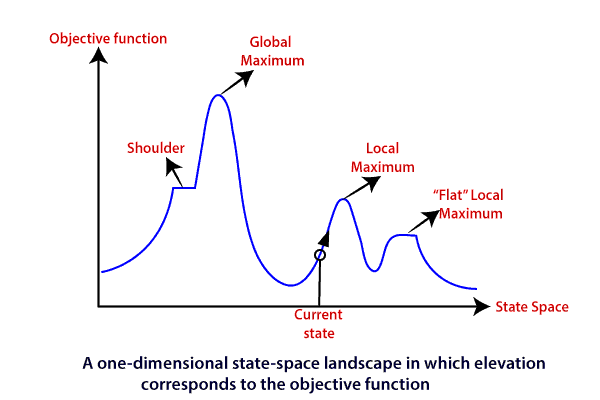

state-evaluation function, induce a state-space

landscape (Note that though the discussion and

algorithms below are phrased in terms of finding

maximum-value states on such landscapes, they can also

be rephrased in terms of finding minimum-value states,

e.g., (greedy) valley-descending search).

- EXAMPLE (RN3, Figure 4.1):

Useful features of such landscapes are:

- Global maximum: A state with the highest value

over all states in the state space.

- Local maximum: A state with the highest value

in that state's neighbourhood.

- Plateau: A set of adjacent states of the same value.

Can distinguish between local maximum plateau (all

states adjacent to the plateau have lower value

than the states in the plateau) and shoulder

plateau (some states adjacent to the plateau have

higher value than the states in the plateau).

Though global maxima are most desirable, local search

runs the (frequently unavoidable) risk of being trapped

in local maxima.

- Types of local search are distinguished by the methods

used to escape local maxima.

- Hill-climbing search (greedy search)

- This algorithm finds a local maximum, local

maximum plateau, or shoulder that is accesible

from the initial state.

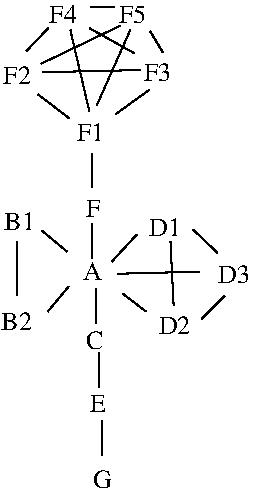

- EXAMPLE: Basic hill-climbing search for

finding (maximal) cliques.

In what ways can hill-climbing search proceed

from state {A} in the graph above? State

{F}? State {E}? State {D1}?

- Not complete or optimal in general but is

typically fast and very memory-efficient.

- Is complete and optimal if the

state-space landscape has a particular

"dome" structure (Cormen et al

(2009), Section 16.4), e.g.,

Prim's and Kruskal's algorithms for

finding minimum spanning trees.

- Variants:

- Limited sideways-move hill climbing.

- Allows sideways moves in hopes that

an encountered plateau is actually a

shoulder.

- Need to limit the number of sideways

moves so you do not go into an

infinite loop on an actual plateau.

- Stochastic hill-climbing.

- Chooses at random among uphill moves

with uphill move probability

proportional to degree of solution

improvement.

- First-choice hill-climbing.

- Chooses at random among uphill moves

with uphill move probability

proportional to degree of solution

improvement until either a move resulting

in a state with a value better than

that of the current state is found or

there are no uphill moves from the

current state, i.e., a plateau has

been reached..

- Random-restart hill climbing.

- Implements common dictum: "If at

first you don't succeed, try again."

- Performs hill-climbing repeatedly

from randomly-chosen start states

until goal is found.

- Is complete, as one will eventually

generate the goal state.

- If probability of attaining a goal

from a randomly-chosen start state

is p, the expected number of

required restarts is 1/p.

- Though all of these variants are slower

than basic hill-climbing search, they

can be remarkably effective in certain

state-space landscapes, e.g. allowing up

to 100 sideways moves in a hill-climbing

search for the 8-Queens problem

increases the percentage of solved

instances from 14% to 94%.

- Local beam search

- Unlike hill-climbing, which only has one

current state, local beam search keeps track

of the best k states found so far and performs

hill-climbing simultaneously on all of them.

- EXAMPLE: Basic local beam search for

finding (maximal) cliques.

- Is not equivalent to running k independent

random-restart hill-climbing searches in

parallel; in local beam search, useful

information is passed between searches and

resources are focused dynamically where

progress is being made, i.e., "No progress

there? Help me over here!".

- Not complete or optimal in general but is

typically fast and very memory-efficient.

- Variants:

- Stochastic local beam search

- Chooses k new states at random among

uphill moves from the k current

states with uphill move probability

proportional to degree of solution

improvement.

- Analogous to natural selection in

biological evolution.

- Local search and its variants are characterized by an

increasing use of computer time as a substitute for

hand-crafted search strategy (Tony Middleton's "cook

until done") -- as such, these methods are a first

taste of the machine-crafted algorithms we will be

looking at in the final lectures of this course.

- Potential exam questions

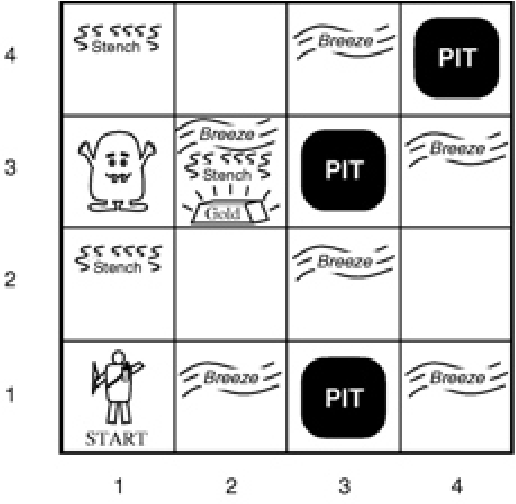

Thursday, October 3 (Lecture #9)

[DC3200, Lecture #10;

RN3, Chapter 5; Class Notes]

Lines modified from the MaxValue (one-level) algorithm

are indicated by "<=".

ALGORITHM: MaxValue and MinValue (full tree)

1. Function MaxValue(s) Function MinValue(s)

2. if leaf(s) if leaf(s)

3. return eval(s) return eval(s)

4. v = -infinity v = +infinity

5. for (c in children(s)) for (c in children(s))

6. v' = MinValue(c) v' = MaxValue(s)

7. if (v' > v) v = v' if (v' < v) v = v'

8. return v return v

Initial Call: MaxValue(startState)

eval(s) returns score wrt the current (maximizing) player.

ALGORITHM: MaxValue and MinValue (limited-depth)

1. Function MaxValue(s, d) Function MinValue(s, d) <=

2. if (leaf(s) or d >= MaxD) if (leaf(s) or d >= MaxD) <=

3. return eval(s) return eval(s)

4. v = -infinity v = +infinity

5. for (c in children(s)) for (c in children(s))

6. v' = MinValue(c, d+1) v' = MaxValue(c, d+1) <=

7. if (v' > v) v = v' if (v' < v) v = v'

8. return v return v

Initial Call: MaxValue(startState, 0)

Lines modified from the MaxValue and MinValue (full tree)

algorithms are indicated by "<=".

ALGORITHM: MiniMax search (depth-limited)

1. Function MiniMax(s, d, isMax)

2. if (leaf(s) or d >= MaxD)

3. return eval(s)

4. if (isMax)

5. v = -infinity

6. for (c in children(s))

7. v = max(v, MiniMax(c, d+1, false))

8. else

9. v = +infinity

10. for (c in children(s))

11. v = min(v, MiniMax(c, d+1, true))

12. return v

Initial call: MiniMax(startState, 0, true)

One final (and trivial) change is required to ensure

that the best action for the current (max) player to take is

stored in the start state.

ALGORITHM: MiniMax search (depth-limited) + saved best action [ASSIGNMENT #2]

1. Function MiniMax(s, d, isMax)

2. if (leaf(s) or d >= MaxD)

3. return eval(s)

4. if (isMax)

5. v = -infinity

6. for (c in children(s))

7. v' = MiniMax(c, d+1, false) <=

8. if (v' > v) <=

9. v = v' <=

10. if (d == 0) self.currentBestAction = c.action <=

11. else

12. v = +infinity

13. for (c in children(s))

14. v = min(v, MiniMax(c, d+1, true))

15. return v

Initial call: MiniMax(startState, 0, true)

INTERLUDE: A look at the mechanics of

Assignment #2.

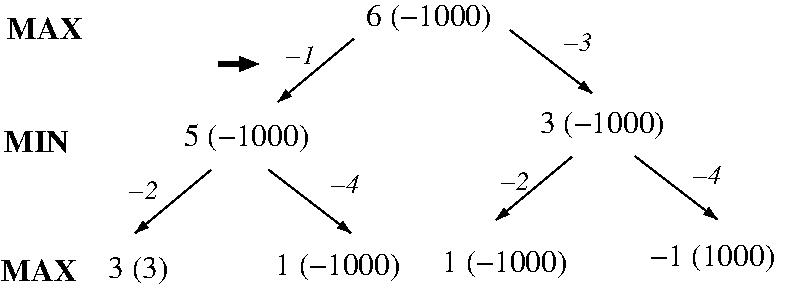

EXAMPLE: MiniMax generation and evaluation of an

altNim game tree from Assignment #2 (maxD = 2, i.e.,

two-move lookahead / startOdd = True / # given sticks = 6)

(see Test #3 in Assignment #2).

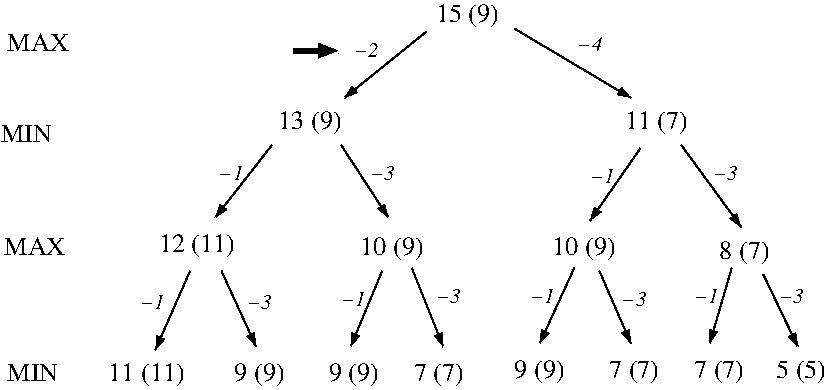

EXAMPLE: MiniMax generation and evaluation of an

altNim game tree from Assignment #2 (maxD = 3, i.e.,

three-move lookahead / startOdd = False / # given

sticks = 15) (see Test #6 in Assignment #2).

Potential exam questions

Tuesday, October 8

Thursday, October 10

- No lecture; class cancelled

Tuesday, October 15

- No lecture; midterm break

Thursday, October 17

- No lecture; class cancelled

Tuesday, October 22 (Lecture #10)

[DC3200, Lecture #10;

RN3, Chapters 5 and 6; Class Notes]

- Went over In-class exam # 1.

- Problem Solving and Search (Cont'd)

- Given that max(a, b) = -max(-a, -b), we can shorten the

MiniMax algorithm above to create the NegaMax algorithm.

- NegaMax is harder to understand and debug but it is the

version of MiniMax that is used in practice.

- ALGORITHM: NegaMax search (depth-limited)

1. Function NegaMax(s, d, isMax)

2. if (leaf(s) or d >= MaxD)

3. return eval(s)

5. v = -infinity

6. for (c in children(s))

7. v = max(v, -NegaMax(c, d+1, false))

12. return v

Initial call: NegaMax(startState, 0, true)

- Performance of MiniMax search

- MiniMax is complete wrt given maximum depth MaxD

- MiniMax is optimal wrt given maximum depth MaxD

- Time Complexity: O(b^{MaxD})

- Space Complexity: O(b * MaxD)

- MiniMax plays the Nash Equilibrium, i.e., each player

makes the best response to the possible actions of

the other player and thus neither player can gain by

deviating from the moves proposed by MiniMax.

- A closer examination of the intermediate results created

at the internal game tree nodes during MiniMax search

reveals that these results can be used to prune parts

of the game tree that cannot yield solutions better than

those that have been found so far.

- EXAMPLE: MiniMax + pruning (one move each player)

[DC3200, Lecture #10, Slides 40-50

(PDF)]

- This optimization of MiniMax is known as AlphaBeta pruning.

- Introduces two new MiniMax parameters:

- alpha: Best alternative for the Max player on

this particular path through the game tree.

- beta: Best alternative for the Min player on

this particular path through the game tree.

- The interval [alpha, beta] is an ever-narowing window

as MiniMax search progresses which ``good'' paths

must pass through and thus allows other paths to be

pruned.

- ALGORITHM: MaxValue and MinValue (limited-depth + AlphaBeta)

1. Function MaxValue(s, a, b, d) Function MinValue(s, a, b, d) <=

2. if (leaf(s) or d >= MaxD) if (leaf(s) or d >= MaxD)

3. return eval(s) return eval(s)

4. v = -infinity v = +infinity

5. for (c in children(s)) for (c in children(s))

6. v' = MinValue(c, a, b, d+1) v' = MaxValue(c, a, b, d+1) <=

7. if (v' > v) v = v' if (v' < v) v = v'

8. if (v' >= b) return v if (v' <= a) return v <=

9. if (v' > a) a = vi if (v' < b) b = v' <=

10. return v return v

Initial Call: MaxValue(startState, -infinity, +infinity, 0)

Lines modified from the MaxValue and MinValue (limited-depth)

algorithms are indicated by "<=".

EXAMPLE: MaxValue + AlphaBeta pruning (two moves each player)

[DC3200, Lecture #10, Slides 56-110

(PDF)]

ALGORITHM: MiniMax search (depth-limited + AlphaBeta)

1. Function MiniMax(s, a, b, d, isMax) <=

2. if (leaf(s) or d >= MaxD)

3. return eval(s)

4. if (isMax)

5. v = -infinity

6. for (c in children(s))

7. v' = max(v, MiniMax(c, a, b, d+1, false)) <=

8. if (v' > v) v = v' <=

9. if (v' > b) return v <=

10. if (v' > a) a = v' <=

11. else

12. v = +infinity

13. for (c in children(s))

14. v' = min(v, MiniMax(c, a, b, d+1, true)) <=

15. if (v' < v) v = v' <=

16. if (v' <= a) return v <=

17. if (v' < b) b = v' <=

18. return v

Initial call: MiniMax(startState, -infinity, +infinity, 0, true)

Lines modified from the MiniMax (limited-depth)

algorithm are indicated by "<=".

One final (and trivial) change is required to ensure

that the best action for the current (max) player to take is

stored in the start state.

ALGORITHM: MiniMax search (depth-limited + AlphaBeta + saved

best action)

1. Function MiniMax(s, a, b, d, isMax)

2. if (leaf(s) or d >= MaxD)

3. return eval(s)

4. if (isMax)

5. v = -infinity

6. for (c in children(s))

7. v' = max(v, MiniMax(c, a, b, d+1, false))

8. if (v' > v) v = v'

9. if (v' > b) return v

10. if (v' > a) <=

11. a = v <=

12. if (d == 0) self.currentBestAction = c.action <=

13. else

14. v = +infinity

15. for (c in children(s))

16. v' = min(v, MiniMax(c, a, b, d+1, true))

17. if (v' < v) v = v'

18. if (v' <= a) return v

19. if (v' < b) b = v'

20. return v

Initial call: MiniMax(startState, -infinity, +infinity, 0, true)

Lines modified from the MiniMax (limited-depth + AlphaBeta)

algorithm are indicated by "<=".

AlphaBeta pruning cuts the number of nodes searched by

MiniMax from O(b^d) to approximately 2b^{d/2} (which

can be proven to be the best possible). This allows

searches to depth d under MiniMax with a fixed amount

of computational resources to go to depth 2d under

MiniMax + AlphaBeta with the same resources, e.g.,

a previously bad gameplaying program can be enhanced to

beat a human world champion!

The MiniMax + AlphaBeta algorithm can be shortened

by rearranging the code above to be more

efficient; a runtime limit can also be enforced by

using the iterative deepening strrategy previously

applied to Depth-First Search in Lecture #5 (see

Lecture #10 of DC3200 for details).

Potential exam questions

- In most of our our previously discussed search algorithms

(cf. local search), our primary concern when solving

problems was more with sequences of actions that resulted

in goal states rather than the goal states themselves.

- Are there other types of search algorithms for problems

where the goal states are the primary concern?

- Let us focus on Constraint Satisfaction Problems

(CSPs).

- In a CSP, states have factored representations (see

Lecture #3) that are defined by a set of variables

X = {X1, X2, ..., Xn}, each of which has a value from

some variable-specific domain Di, and a set C of

constraints on the values of those variables.

- A constraint is described by a pair <(scope),

rel> where scope is a tuple of

variables from X and rel is a relation specifying

the values of the variables in rel allowed

by the constraint.

- The number or variables in scope determines

that constraint's arity (e.g., 1 = unary, 2 = binary,

3 = trinary, m = m-ary where m is arbitrary (global

constraint)).

- There are typically several ways to specify a

particular variable relation.

- EXAMPLE: We can specify the binary constraint on

variables X1 and X2 with common domain {A,B} that X1

and X2 have different values as <(X1,X2),[(A,B),(B,A)]>

or <(X1,X2), X1 \neq X2>.

- Each state in the state-space of a CSP specifies an

assignment of values [X1 = v1, X2 = v2, ..., Xn = vn]

to the variables in the CSP.

- A value-assignment that gives a value to each variable

in a CSP is said to be complete; if one or

more variables are not assigned values, the

value-assignment is partial.

- A value-assignment that satisfies all given constraints

in C for a CSP is said to be consistent; if one

or more constraints in C are not satisfed, the

value-assignment is inconsistent..

- A solution (goal state) for a CSP is a complete

consistent value-assignment for that CSP.

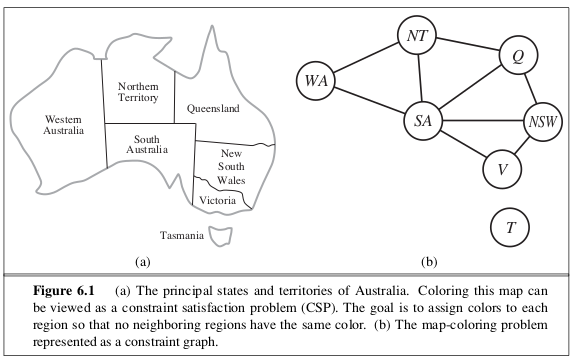

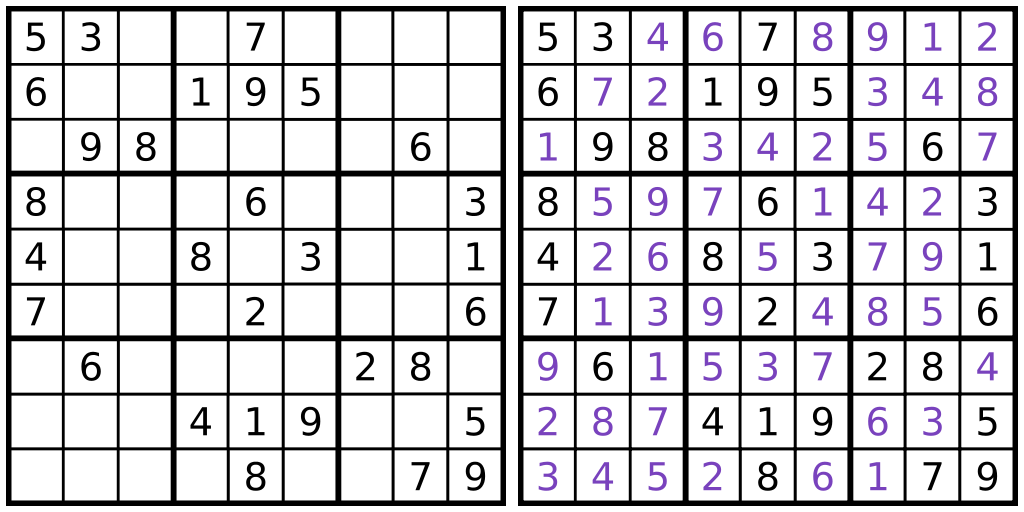

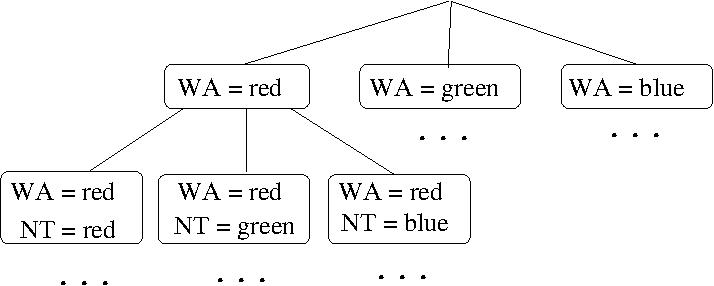

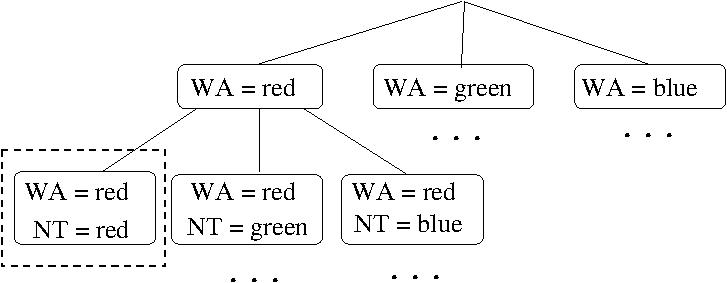

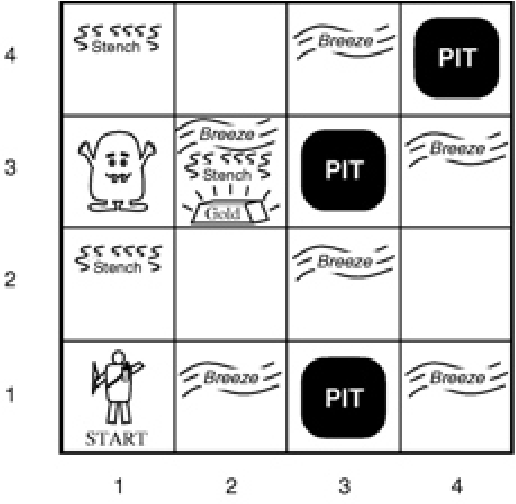

- EXAMPLE: A CSP for coloring a map of Australia.

- In map coloring, we want to assign colors to the

regions in the map such that no two adjacent regions

have the same color.

- Coloring a map of Australia (part (a)) can be modeled as

a CSP with variables X = {WA, NT, Q, NSW, V, SA, T}

over the common domain D = {red, green, blue} and

the binary constraints C = {SA \neq WA, SA \neq NT,

SA \neq Q, SA \neq NSW, SA \neq V, WA \neq NT, NT \neq

Q, Q \neq NSW, NSW \neq V} (where X \neq Y is

shorthand for the constraint <(X,Y) X \neq Y>)..

- Such a CSP with all binary constraints can be modeled

as a constraint graph (part (b)), which is an

undirected graph whose vertices correspond to variables

and edges correspond to constraints.

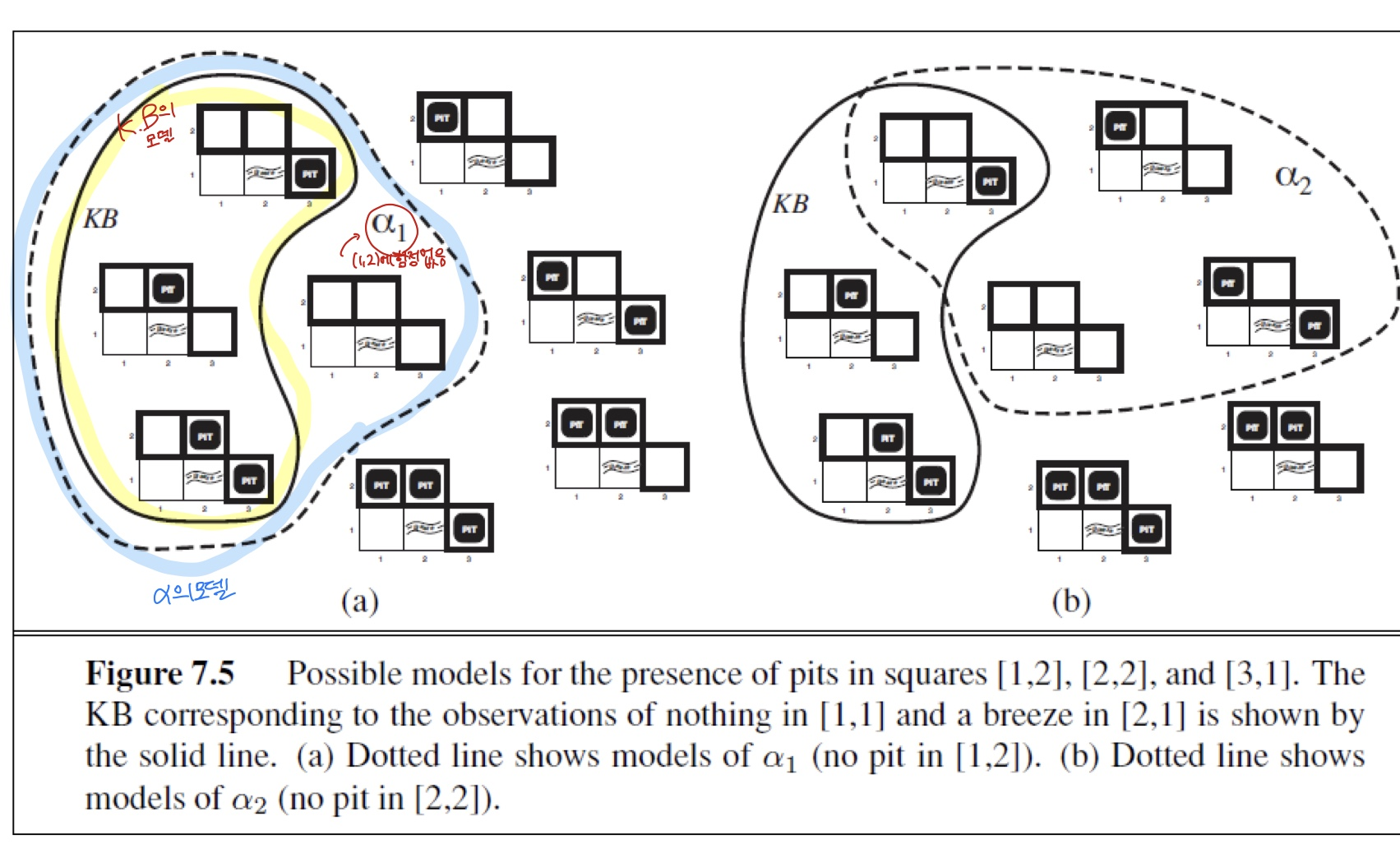

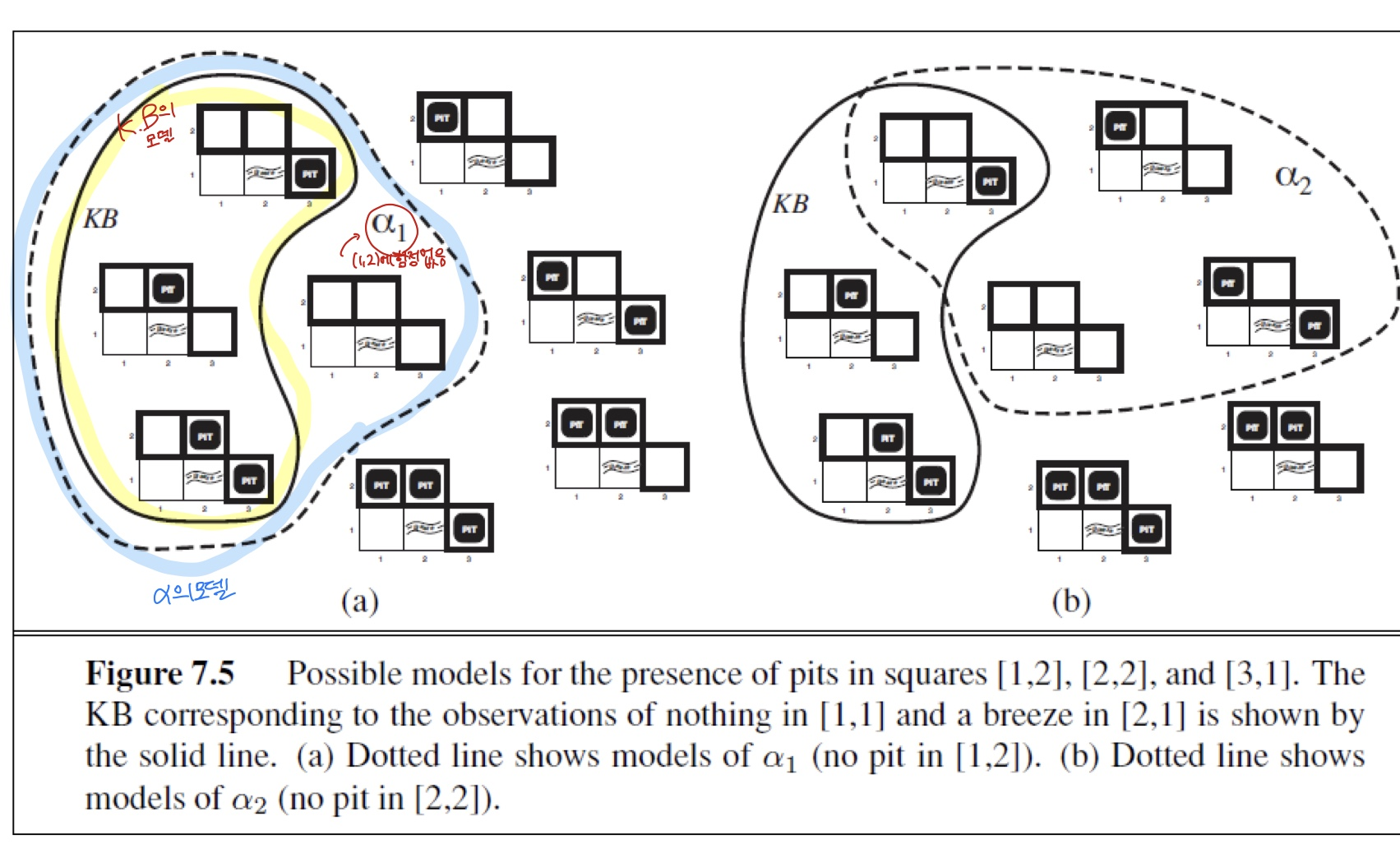

- CSPs with m-ary constraints, m >= 2, can be